Transfer learning in TensorFlow

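First, to make it easy to split the network into modules (sub-networks), load weights for each of them separately, and choose which ones are trained and which ones are reused, give the network weights a scope with the statements below. A collection can be used to save hidden-layer outputs as a dictionary. Layers defined inside a with xxx: block also need a scope of their own.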

import tensorflow as tf

import tensorflow.contrib.slim as slim

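# When variables need to be reused, set reuse=tf.AUTO_REUSE
with tf.variable_scope("model_name", "model_name", reuse=tf.AUTO_REUSE) as sc:
    # The network is defined inside this block
    # (input and lrelu are assumed to be defined elsewhere)
    conv1 = slim.conv2d(input, 32, [3, 3], rate=1, activation_fn=lrelu, scope='layer_name')
    # Hidden-layer outputs can also be kept in an end_points dictionary
    end_points = slim.utils.convert_collection_to_dict("collection_name")
    end_points[sc.name + "/layer_name"] = conv1

Next, specify the scope to be trained at the point where the optimizer is defined: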

loss = ...  # define your loss here
# Collect the trainable variables that live under the scope
train_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, "model_name")
# Pass that list as var_list
opt = tf.train.AdamOptimizer(learning_rate=1e-5).minimize(loss, var_list=train_vars)

Finally, load the pretrained weights:

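# Initialize the variables before restoring the weights
sess.run(tf.global_variables_initializer())
# Does not work at this point: init_from_checkpoint only rewrites the variables'
# initializers, so it has to be called before the initializer op is run
# tf.train.init_from_checkpoint("model_name.ckpt", {"model_name/":"model_name/"})
# A better way to load the weights
saver = tf.train.Saver(tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, "model_name"))
saver.restore(sess, "model_name.ckpt")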

import os
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt  # used by load_image_to_memory

def mkdir(result_dir):
    # Create result_dir if it does not exist yet
    if not os.path.isdir(result_dir):
        os.makedirs(result_dir)
    return

def load_ckpt_initialize(checkpoint_dir, sess):
    # Initialize all variables, then restore the latest checkpoint if there is one
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())
    ckpt = tf.train.get_checkpoint_state(checkpoint_dir)
    if ckpt:
        print('loaded ' + ckpt.model_checkpoint_path)
        saver.restore(sess, ckpt.model_checkpoint_path)
    return saver

def sobel_loss(img1, img2):
    # L1 loss computed after Sobel edge detection. It was meant to prevent blurring,
    # but seems to have problems: the network learns edges that do not exist.
    edge_1 = tf.math.abs(tf.image.sobel_edges(img1))
    edge_2 = tf.math.abs(tf.image.sobel_edges(img2))
    m_1 = tf.reduce_mean(edge_1)
    m_2 = tf.reduce_mean(edge_2)
    # Binarize each edge map against its own mean
    edge_bin_1 = tf.cast(edge_1 > m_1, tf.float32)
    edge_bin_2 = tf.cast(edge_2 > m_2, tf.float32)
    return tf.reduce_mean(tf.math.abs(edge_bin_1 - edge_bin_2))

def load_image_to_memory(image_dir):
    # Load every image under image_dir into memory and return them as a list.
    # image_dir must end with a path separator.
    if not image_dir.endswith(("/", "\\")):
        print("invalid dir")
        return -1
    image_pack = []
    for i in os.listdir(image_dir):
        image_tmp = plt.imread(image_dir + i)
        image_pack.append(image_tmp)
    return image_pack

def generate_batch(train_pics, batch_size, dark_pack, gt_pack):
    """
    :argument
        train_pics: number of images
        batch_size: int
        dark_pack: list of [h,w,c] images (nparray), train_pics elements
        gt_pack: list of [h,w,c] images (nparray), train_pics elements
    :returns
        nparray [batch_size, h,w,c], one batch of random crops
    """
    input_patches = []
    gt_patches = []
    for ind in np.random.permutation(train_pics)[:batch_size]:
        imgrgb = dark_pack[ind]
        imggt = gt_pack[ind]
        W = imgrgb.shape[0]
        H = imgrgb.shape[1]
        # Patch size: a quarter of the shorter side, at least 8
        ps = max(min(W // 4, H // 4), 8)
        xx = np.random.randint(0, W - ps)
        yy = np.random.randint(0, H - ps)
        img_feed_in = imgrgb[np.newaxis, :, :, :]
        img_feed_gt = imggt[np.newaxis, :, :, :]
        # random crop flip to generate patches
        input_patch = img_feed_in[:, xx:xx + ps, yy:yy + ps, :]
        # The doubled coordinates presumably mean the ground truth has twice the input resolution
        gt_patch = img_feed_gt[:, xx * 2:xx * 2 + ps * 2, yy * 2:yy * 2 + ps * 2, :]
        if np.random.randint(2, size=1)[0] == 1:  # random flip
            input_patch = np.flip(input_patch, axis=1)
            gt_patch = np.flip(gt_patch, axis=1)
        if np.random.randint(2, size=1)[0] == 1:
            input_patch = np.flip(input_patch, axis=2)
            gt_patch = np.flip(gt_patch, axis=2)
        if np.random.randint(2, size=1)[0] == 1:  # random transpose
            input_patch = np.transpose(input_patch, (0, 2, 1, 3))
            gt_patch = np.transpose(gt_patch, (0, 2, 1, 3))
        input_patch = np.minimum(input_patch, 1.0)
        input_patches.append(input_patch)
        gt_patches.append(gt_patch)
    return np.concatenate(input_patches, 0), np.concatenate(gt_patches, 0)

def cov(x, y):
    # Covariance of two image batches in NHWC format, one value per sample
    mshape = x.shape
    # n,h,w,c
    x_bar = tf.reduce_mean(x, axis=[1, 2, 3])
    y_bar = tf.reduce_mean(y, axis=[1, 2, 3])
    # Broadcast the per-sample means back to the full image shape
    x_bar = tf.einsum("i,jkl->ijkl", x_bar, tf.ones_like(x[0, :, :, :]))
    y_bar = tf.einsum("i,jkl->ijkl", y_bar, tf.ones_like(x[0, :, :, :]))
    return tf.reduce_mean((x - x_bar) * (y - y_bar), [1, 2, 3])

def cumsum(xs):
    # TensorFlow version of np.cumsum along the first axis
    # (tf.cumsum provides the same thing built in)
    values = tf.unstack(xs)
    out = []
    prev = tf.zeros_like(values[0])
    for val in values:
        s = prev + val
        out.append(s)
        prev = s
    result = tf.stack(out)
    return result

def piecewise_linear_fn(x, x1, x2, y1, y2):
    # Piecewise linear interpolation between (x1, y1) and (x2, y2); zero outside [x1, x2].
    # zeros_like keeps both branches of tf.where the same shape as x.
    return tf.where(tf.logical_or(tf.less(x, x1), tf.greater(x, x2)),
                    tf.zeros_like(x),
                    y1 + (y2 - y1) / (x2 - x1) * (x - x1))

def count(matrix, minval, maxval):
    # Count the entries with minval < matrix < maxval
    return tf.reduce_sum(tf.where(tf.logical_and(tf.greater(matrix, minval), tf.less(matrix, maxval)),
                                  tf.ones_like(matrix, dtype=tf.float32),
                                  tf.zeros_like(matrix, dtype=tf.float32)))

def generate_opt(loss):
    # Adam optimizer whose learning rate is fed through a placeholder
    lr = tf.placeholder(tf.float32)
    opt = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss)
    return opt, lr

def generate_weights(shape):
    # Trainable weights initialized uniformly in [0, 1)
    weights = tf.Variable(tf.random_uniform(shape=shape, minval=0.0, maxval=1.0))
    return weights

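For debugging, use the eval() method of the tensor objects that TensorFlow/Keras passes between network layers. Inside a TensorFlow Session, run tf.global_variables_initializer() to initialize the variables defined so far. After that you can change the previously defined global variables to feed the network custom inputs, and use eval() to inspect the values flowing between layers.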

input_test = X[0], Y[0]  # a test sample cut from the input data
output_test = model(
    [tf.convert_to_tensor(input_test[0][0], float),
     tf.convert_to_tensor(input_test[0][1], float)]
)  # feed the network and get its final output
loss = my_loss(tf.convert_to_tensor(input_test[1], float), output_test)
test_x1 = tf.convert_to_tensor([[1, 2], [2, 3]], float)  # test input for the my_layer layer, first argument
test_x2 = tf.convert_to_tensor([[2, 1], [4, 3]], float)  # test input for the my_layer layer, second argument
test_simulation = my_layer([test_x1, test_x2])  # compute the test result
print(test_simulation.shape)  # the shape of an intermediate layer can be printed without starting a session
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(test_simulation.eval())
    print(input_test[1], output_test.eval())
    print(loss.eval())

TypeError: Input 'b' of 'MatMul' Op has type float32 that does not match type float64 of argument 'a'.

This error is likely caused by a tensor passed to the model (or function) whose dtype is not the expected float type. Pass the offending tensor through tf.convert_to_tensor() to get a tensor of the right type, with the dtype argument set to np.float32 or float (if you do not mind the effort, converting every tensor this way will certainly fix the problem). For example:

input = tf.convert_to_tensor(input, np.float32)

ValueError: An operation has `None` for gradient. Please make sure that all of your ops have a gradient defined (i.e. are differentiable). Common ops without gradient: K.argmax, K.round, K.eval.

When this happens, the problem is usually in a custom loss function.

ValueError: Error when checking target: expected dense to have 3 dimensions, but got array with shape (1, 1)

This error generally means that the number of dimensions (shape) of the input data tensor or of the label tensor does not match the input layer or output layer of the network. For example, when fitting training data with model.fit_generator(), suppose the generator argument passed to it is defined as follows:

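import numpy as np

X = [1, 2, 3]
Y = [1, 2, 3]
def generator(X, Y):
    while True:
        for i in range(len(Y)):
            yield np.array([[[X[i]]]]), np.array([[[Y[i]]]])
model.fit_generator(generator(X, Y), steps_per_epoch=1, epochs=10, verbose=1)

The training data X yielded by the generator then reaches fit_generator as a tensor of shape (1, 1, 1), so the model's input layer has to be (None, 1, 1). The input layer shape follows the convention (batch size, input_shape[0], input_shape[1]), which means the input_shape argument of the first layer should be set to (1, 1). The labels Y yielded by the generator are also tensors of shape (1, 1, 1), so the batch size is 1 and the output layer must be designed with shape (None, 1, 1). Note: the first element of an input or output layer shape is the batch size and has nothing to do with the dimensionality or shape of the actual data; the input_shape argument only sets the shape of one sample, which forms the remaining elements of the input layer shape.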
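As a rough check of the shape bookkeeping described here, the following NumPy-only sketch (no Keras involved; the variable names are illustrative and not from the original) builds one sample with three levels of nesting and splits its shape into the batch size and the per-sample input_shape.

```python
import numpy as np

# One training sample wrapped in three levels of nesting.
sample = np.array([[[1]]])

# The first axis is the batch size; the remaining axes are what Keras calls input_shape.
batch_size = sample.shape[0]
input_shape = sample.shape[1:]

print(sample.shape)   # (1, 1, 1)
print(batch_size)     # 1
print(input_shape)    # (1, 1)
```

Printing the shape of what a generator yields before calling fit_generator is usually the quickest way to spot this kind of mismatch.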

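To avoid this trouble, reshape the data to match the network's input and output shapes before feeding it in. For the example above:

X = [1, 2, 3]
Y = [1, 2, 3]
def generator(X, Y):
    while True:
        for i in range(len(Y)):
            # input_layer_shape and output_layer_shape are assumed to be concrete shapes
            # with the batch dimension filled in, e.g. (1, 1, 1)
            yield (np.array(X[i]).reshape(input_layer_shape),
                   np.array(Y[i]).reshape(output_layer_shape))
model.fit_generator(generator(X, Y), steps_per_epoch=1, epochs=10, verbose=1)

AttributeError: 'Model' object has no attribute 'total_loss'

This usually means the code that defines and compiles the model failed at run time: the model was not built correctly, the failure went unhandled, and the half-built model is now missing pieces.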

You have run out of memory. Try reducing the size of the model, or use a machine with more RAM.

ResourceExhaustedError: OOM when allocating tensor with shape[1,64,1080,1920] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc

[[{{node concat_3}} = ConcatV2[N=2, T=DT_FLOAT, Tidx=DT_INT32, _device="/job:localhost/replica:0/task:0/device:GPU:0"](conv2d_transpose_3, g_conv1_2/Maximum, concat-2-LayoutOptimizer)]]

Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

[[{{node DepthToSpace/_3}} = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0",

send_device_incarnation=1, tensor_name="edge_277_DepthToSpace", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

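Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

Switching to a machine with more GPU memory solved this.

If two or more Python processes are using the GPU at the same time, runtime errors such as copy errors are sometimes reported; closing the other processes or kernels fixes it.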

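Because loss functions tend to be complicated, NaN values show up often. To track down this kind of bug, check the tensors one at a time with the following statement:

tf.reduce_any(tf.math.is_nan(value)).eval(session=tf.Session())

Functions that commonly produce NaN include tf.math.divide, tf.math.log and tf.math.sqrt. For NaN caused by division by zero, the following function keeps the denominator away from 0:

def safe_divisor(x):
    # Values in [0, 1e-6) are replaced by 1e-6; everything else passes through unchanged
    return tf.where(tf.logical_and(tf.less(x, 1e-6), tf.greater_equal(x, 0)), 1e-6 * tf.ones_like(x), x)

For log and sqrt, the following function keeps the argument greater than 0:

def safe_positive(x):
    return tf.math.abs(x) + 0.001

def normalize(image):
    # Rescale to [0, 1]. The range is clamped so a constant image does not divide by zero
    # (a Python-level "if i_max == i_min" does not compare tensor values in graph mode).
    i_max = tf.math.reduce_max(image)
    i_min = tf.math.reduce_min(image)
    return tf.math.divide(image - i_min, tf.maximum(i_max - i_min, 1e-6))

In addition, a learning rate that is too large can also turn the loss into NaN. In that case, however, the loss is usually not NaN right after random weight initialization, before any backpropagation has happened.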
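To see the guards in action without building a TensorFlow graph, here is a minimal NumPy sketch of the same two ideas. It is an analogue written for illustration, not the TensorFlow code itself: np.where stands in for tf.where, and the eps defaults and sample arrays are assumptions.

```python
import numpy as np

def safe_divisor(x, eps=1e-6):
    # Replace values in [0, eps) by eps so a division never sees an exact zero.
    x = np.asarray(x, dtype=np.float64)
    return np.where((x >= 0) & (x < eps), eps, x)

def safe_positive(x, eps=1e-3):
    # Shift |x| away from zero so log and sqrt stay finite.
    return np.abs(x) + eps

numerator = np.array([0.0, 1.0, 1.0])
denominator = np.array([0.0, 0.5, 2.0])

# Unguarded division: 0/0 produces NaN (warnings silenced for the demo).
with np.errstate(divide="ignore", invalid="ignore"):
    raw = numerator / denominator

guarded = numerator / safe_divisor(denominator)

has_nan_raw = bool(np.isnan(raw).any())
has_nan_guarded = bool(np.isnan(guarded).any())
log_ok = np.log(safe_positive(np.array([0.0, -1.0])))

print(has_nan_raw, has_nan_guarded)  # True False
```

The same check, isnan followed by any, is what the tf.reduce_any(tf.math.is_nan(value)) statement performs on a tensor.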

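ValueError: Cannot convert a partially known TensorShape to a Tensor: xxx

Statements such as x,y,z = tensor.shape lead to this error: tensor.shape is a static TensorShape, and a dimension that is still unknown cannot be converted to a tensor when it is used later.

TypeError: Cannot create initializer for non-floating point type.

This error occurs when the dtype of a Placeholder does not match the input type of the network.

Adding sess.run(tf.global_variables_initializer()) after all Placeholders, variables and the optimizer have been defined solves the problem.

ValueError: Cannot convert an unknown Dimension to a Tensor: ?

Change tensor.shape[0] to tf.shape(tensor)[0].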


RuntimeError: Input type (torch.cuda.FloatTensor) and weight type (torch.FloatTensor) should be the same

Add model.cuda() right after the model is initialized.

RuntimeError: cuda runtime error (59) : device-side assert triggered at xxx

Rerun the Python script with the following flag:

CUDA_LAUNCH_BLOCKING=1 python your_script.py

and debug from the assertion failure message.

Python parses a if condition else b + c if condition else d as a if condition else (b + c if condition else d), so always wrap each if-else expression in parentheses.
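The effect is easy to reproduce in plain Python. In this small sketch the function names and the sample values are made up for illustration; fused is the unparenthesized form and intended is what was probably meant.

```python
def fused(flag, a, b, c, d):
    # Parsed as: a if flag else ((b + c) if flag else d)
    return a if flag else b + c if flag else d

def intended(flag, a, b, c, d):
    # Each conditional expression wrapped in its own parentheses
    return (a if flag else b) + (c if flag else d)

print(fused(True, 1, 2, 10, 20))      # 1
print(intended(True, 1, 2, 10, 20))   # 11
print(fused(False, 1, 2, 10, 20))     # 20
print(intended(False, 1, 2, 10, 20))  # 22
```

Without parentheses the second conditional is swallowed by the else branch of the first, so the sum is never formed when flag is true.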

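A KeyError is raised when a key cannot be found in a dictionary.

Indexing a PyTorch tensor returns a view that shares memory with the original, so a tensor meant to record a past value cannot be taken with a plain assignment; use clone() instead.

import torch

a = torch.zeros((2, 3))
a_old = a[1, 1]            # tensor(0.), but still a view into a
a_saved = a[1, 1].clone()  # independent copy
a[1, 1] = 1
print(a_old)    # tensor(1.)
print(a_saved)  # tensor(0.)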

from sympy.ntheory.primetest import isprime

def hex_str2int(s):
    # Parse a colon-separated hex string (spaces and newlines ignored) into an int
    prime = "".join(s.replace(" ", "").replace("\n", "").split(":"))
    return int(prime, 16)

# Requires matchpy and sympy.__version__ >= '1.5'
from sympy import symbols
from sympy import pprint
from sympy.integrals.rubi.rubimain import LoadRubiReplacer
from sympy.integrals.rubi.rubimain import rubi_integrate
x, y, z, b, d, m, n = symbols('x y z b d m n', real=True, imaginary=False)
loader = LoadRubiReplacer()
loader.from_pickle("rubi.pickle")
pprint(rubi_integrate((b*x)**m*(d*x + 2)**n, x))
loader.to_pickle("rubi.pickle")

import numpy as np

def cardinal_mul(x, y):
    # Cartesian product of x and y, returned as an array of (x_i, y_j) pairs
    x = np.array(x).reshape(-1)
    y = np.array(y).reshape(-1)
    return np.array(np.meshgrid(x, y)).T.reshape(-1, 2)

import base64

def int2ascii(n):
    # Encode a non-negative int as a base64 ASCII string
    return base64.b64encode(n.to_bytes(n.bit_length()//8 + 1, byteorder='big')).decode('ascii')

def ascii2int(s):
    # Inverse of int2ascii
    return int.from_bytes(base64.b64decode(s.encode('ascii')), byteorder='big')

def filter_linebreak(s):
    # Remove every newline character from s
    return s.translate(str.maketrans({'\n': None}))

def rbf(sigma=1):
    # Returns an RBF (Gaussian) kernel function with the given sigma
    def rbf_kernel(x1, x2, sigma):
        m = len(x1)
        n = len(x2)
        d = x1.shape[1]
        x1 = x1.reshape((m, 1, d))
        x2 = x2.reshape((1, n, d))
        result = np.sum((x1 - x2)**2, 2)
        result = np.exp(-result / (2 * sigma**2))
        return result
    return lambda x1, x2: rbf_kernel(x1, x2, sigma)

def poly(n=3):
    # Returns a polynomial kernel function of degree n
    return lambda x1, x2: (x1 @ x2.T)**n

import sparse

s = sparse.zeros((m, n))
nparr = s.todense()
s = sparse.COO(nparr)

import sys

def getGB(a):
    print(sys.getsizeof(a) / 1e9, "GB")
    return

a = dict()  # empty dict
b = {1: 1, 2: 2}
c = {3: 3}
d = {**b, **c}  # union of two dicts
d.pop(k)  # remove the item whose key is k

a = frozenset([1, 2])
b = frozenset([1, 2, 3])
a & b   # intersection
a | b   # union
a < b   # a is a proper subset of b
a <= b  # a is a subset of b
a ^ b   # symmetric difference
a - b   # difference
i in a  # i is an element of a
a = frozenset([1, 2, 3])
b = frozenset([2, 5])
c = frozenset([a, b])  # nested sets: frozenset{frozenset{}}

g[n1][n2]['label']  # get the label of edge n1-n2

g.nodes[n]['label']  # get the label of node n
g.add_node(n, label=newlabel)  # change the label of node n
g.add_edge(*e, label=newlabel)  # change the label of edge e, where e = (u, v)
g = nx.Graph([e1, e2, e3])  # build a graph from edges
g.edges(nodes)  # all edges incident to nodes
g.subgraph(nodes)  # subgraph induced by nodes
g.edge_subgraph(edges)  # subgraph induced by edges
frozenset(a.edge_subgraph(a.edges([1, 2])))  # nodes of the subgraph formed by the edges incident to nodes 1 and 2
nx.draw(G)  # draw the graph
pos = nx.spring_layout(base)  # compute node positions
nx.draw_networkx_nodes(base, pos=pos, node_color='#000000')  # draw nodes in black
nx.draw_networkx_edges(base, pos=pos, edge_color='#000000')  # draw edges in black

from networkx.algorithms import isomorphism

graph = nx.Graph([[0,1],[1,2],[1,3],[3,4],[2,4],[4,5],[1,4]])
subgraph = nx.Graph([[0,1],[0,2]])
gm = isomorphism.GraphMatcher(graph, subgraph)
gm.subgraph_isomorphisms_iter()  # iterator over embedding dicts from an induced subgraph of graph to subgraph; empty if subgraph is not isomorphic to any induced subgraph of graph
gm.subgraph_monomorphisms_iter()  # unlike iso, the condition is looser: the matched subgraph need not be induced
# Comparing mono and iso: mono yields more matches
len(list(gm.subgraph_monomorphisms_iter()))  # == 28
len(list(gm.subgraph_isomorphisms_iter()))  # == 16

import os
filenames = list(os.listdir("dir"))
os.rename(filename1, filename2)

fig, ax = plt.subplots()
ax.errorbar(x, y, err, ecolor='r', fmt='--o', capsize=5)
ax.set_title('title')
ax.set_xlabel('x')
ax.set_ylabel('y')
fig.savefig(filename)
fig.show()
fig.clf()

import matplotlib.pyplot as plt

import scipy.stats
import numpy as np

def mean_confidence_interval(data, confidence=0.95):
    # Returns the sample mean and the half-width of its confidence interval (Student t)
    a = 1.0 * np.array(data)
    n = len(a)
    m, se = np.mean(a), scipy.stats.sem(a)
    h = se * scipy.stats.t.ppf((1 + confidence) / 2., n-1)
    return m, h

# Breaking out of a nested loop with for/else
for i in range(len(s)):
    for j in range(i+1, len(s)):
        if s[i] == s[j]:
            answer = (i, j)
            break
    else:
        continue
    break

def perm(a, i, r):
    # Enumerate every way of picking one element from each list in a
    if i < len(a):
        for j in range(len(a[i])):
            r[i] = a[i][j]
            yield from perm(a, i+1, r)  # same as: for x in perm(a, i+1, r): yield x
    else:
        yield r  # the same list object is reused; copy it with list(r) if results are stored

a = [[1,2],[3,4,5],[6,7],[8]]
for i in perm(a, 0, [0,0,0,0]):
    print(i)

f = {1:3, 2:4}

Map the set A={1,2} to B={3,4}:

B = set(map(lambda x: f[x], A))

a = np.zeros((2,3), dtype=complex)
a[1,2] = 1j

import cv2
import numpy as np
from skimage.transform import resize

def resize_scaling(img, ratio):
    # Resize img by the given ratio in both dimensions
    return resize(img, (int(img.shape[0]*ratio), int(img.shape[1]*ratio)))

def draw_bbox(img_path, label_path, result_path):
    # Read the image (img_path) and the labels (label_path), draw the bounding boxes,
    # and write the result to result_path
    img = cv2.imread(img_path)
    with open(label_path, "r") as f:
        label_string = f.read()
    # One box per line, integers separated by spaces; the first and last lines are skipped
    label = [[int(j) for j in filter(lambda x: x != "", i.split(" "))] for i in label_string.split("\n")][1:-1]
    for bbox in label:
        #bbox=x12y12_to_xywh(i[0],i[1],i[2],i[3])
        img = cv2.rectangle(img, (bbox[0], bbox[1]), (bbox[2], bbox[3]), (0, 255, 0), 4)
    cv2.imwrite(result_path, img)

def x12y12_to_xywh(x1, y1, x2, y2):
    # Convert the bbox format from two corners to corner plus size
    x, y = x1, y1
    w = x2 - x1
    h = y2 - y1
    return x, y, w, h

def white_balance(img):
    # White balance
    result = cv2.cvtColor(img, cv2.COLOR_BGR2LAB)
    avg_a = np.average(result[:, :, 1])
    avg_b = np.average(result[:, :, 2])
    result[:, :, 1] = result[:, :, 1] - ((avg_a - 128) * (result[:, :, 0] / 255.0) * 1.1)
    result[:, :, 2] = result[:, :, 2] - ((avg_b - 128) * (result[:, :, 0] / 255.0) * 1.1)
    result = cv2.cvtColor(result, cv2.COLOR_LAB2BGR)
    return result

def histeq(img):
    # Histogram equalization on the luminance channel
    img_yuv = cv2.cvtColor(img, cv2.COLOR_BGR2YUV)
    # equalize the histogram of the Y channel
    img_yuv[:, :, 0] = cv2.equalizeHist(img_yuv[:, :, 0])
    # convert the YUV image back to BGR format
    img_output = cv2.cvtColor(img_yuv, cv2.COLOR_YUV2BGR)
    return img_output

def histogram_equalize(img):
    # Histogram equalization applied to each color channel separately
    b, g, r = cv2.split(img)
    red = cv2.equalizeHist(r)
    green = cv2.equalizeHist(g)
    blue = cv2.equalizeHist(b)
    return cv2.merge((blue, green, red))

def adjust_gamma(image, gamma=1.0):
    # Gamma correction through a lookup table
    invGamma = 1.0 / gamma
    table = np.array([((i / 255.0) ** invGamma) * 255
                      for i in np.arange(0, 256)]).astype("uint8")
    return cv2.LUT(image, table)

def flood_fill(img, xsize, ysize, x_start, y_start, color, cond):
    # Flood-fill with color for as long as cond holds, starting at (x_start, y_start).
    # img is a numpy array of shape (xsize, ysize, 3) and is modified in place.
    # Pixels in the pending set s are candidates for filling
    s = {(x_start, y_start)}
    # Pixels in the done set filled are never filled again
    filled = set()
    while s:
        (x, y) = s.pop()
        # If the pixel satisfies cond and has not been filled yet, fill it and queue its neighbors
        if cond(img[x, y]) and (x, y) not in filled:
            # Fill and add to the done set
            img[x, y] = color
            filled.add((x, y))
            # Add the neighboring pixels to the pending set
            if x > 0:
                s.add((x-1, y))
            if x < xsize - 1:
                s.add((x+1, y))
            if y > 0:
                s.add((x, y-1))
            if y < ysize - 1:
                s.add((x, y+1))
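A small usage sketch of the flood fill on a toy image, assuming only NumPy. The function is restated compactly so that the block runs on its own; the image size, the position of the white wall and the is_black predicate are illustrative choices that do not come from the original.

```python
import numpy as np

def flood_fill(img, xsize, ysize, x_start, y_start, color, cond):
    # Set-based flood fill over 4-connected neighbors; modifies img in place.
    pending = {(x_start, y_start)}
    filled = set()
    while pending:
        x, y = pending.pop()
        if (x, y) in filled or not cond(img[x, y]):
            continue
        img[x, y] = color
        filled.add((x, y))
        for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
            if 0 <= nx < xsize and 0 <= ny < ysize:
                pending.add((nx, ny))
    return img

# 4x5 black image with a white wall at the third column.
img = np.zeros((4, 5, 3), dtype=np.uint8)
img[:, 2] = 255

def is_black(pixel):
    return not pixel.any()

green = (0, 255, 0)
flood_fill(img, 4, 5, 0, 0, green, is_black)

left_filled = bool((img[:, :2] == green).all())   # region left of the wall is filled
wall_intact = bool((img[:, 2] == 255).all())      # the wall stops the fill
right_untouched = bool((img[:, 3:] == 0).all())   # region behind the wall stays black
print(left_filled, wall_intact, right_untouched)  # True True True
```

Because cond is an arbitrary predicate on a pixel, the same function also covers tolerance-based fills, for example by comparing against a reference color within some distance.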


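In Keras, when an LSTM layer is set up with model.add(), passing input_shape=(None, dimension of each vector in the input sequence) allows sequences of variable length, that is, a variable number of time steps. The sequences then no longer need to be padded with zero vectors to a common length.

Example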

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import LSTM, Dense

import numpy as np

model = Sequential()

model.add(LSTM(32, return_sequences=True, input_shape=(None, 2)))

model.add(LSTM(8, return_sequences=True))

model.add(Dense(1, activation='sigmoid'))

print(model.summary(90))

model.compile(loss='mean_squared_error',

optimizer='adam')

X1=np.array([[[0.25908799, 0.96578602],

[0.22886421, 0.16556086],

[0.82094901, 0.69984487],

[0.97888577, 0.76304284],

[0.28470417, 0.11232793],

[0.23395936, 0.14732181]]])

Y1=np.array([[[1]]])

X2=np.array([[[0.41400308, 0.48925297],

[0.99921471, 0.6069814 ],

[0.61261462, 0.7192767 ]]])

Y2=np.array([[[0.5]]])

model.fit(X1, Y1, steps_per_epoch=2, epochs=10, verbose=1)

However, I personally do not recommend this: with variable-length input used this way, predicting with model.predict_generator() runs into the following bug.

ValueError Traceback (most recent call last)

<ipython-input-17-11e3da5819e6> in <module>()

----> 1 model.predict_generator(generatorX(X,wv_model),steps=10, verbose=1)

E:\Anaconda3\lib\site-packages\tensorflow\python\keras\engine\training.py in predict_generator(self, generator, steps, max_queue_size, workers, use_multiprocessing, verbose)

2296 workers=workers,

2297 use_multiprocessing=use_multiprocessing,

-> 2298 verbose=verbose)

2299

2300 def _get_callback_model(self):

E:\Anaconda3\lib\site-packages\tensorflow\python\keras\engine\training_generator.py in predict_generator(model, generator, steps, max_queue_size, workers, use_multiprocessing, verbose)

435 return all_outs[0][0]

436 else:

--> 437 return np.concatenate(all_outs[0])

438 if steps_done == 1:

439 return [out[0] for out in all_outs]

ValueError: all the input array dimensions except for the concatenation axis must match exactly

This bug is reported on GitHub, but it has not been fixed in older versions.
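The traceback ends in np.concatenate, and the failure can be reproduced with NumPy alone. In this sketch the two arrays stand in for per-batch model outputs of shape (1, time steps, 1) with 6 and 3 time steps; the names are hypothetical. Keeping the outputs in a plain list is one possible workaround, given here as an assumption rather than as the fix adopted upstream.

```python
import numpy as np

# Stand-ins for the outputs of two prediction batches with different sequence lengths.
out_a = np.zeros((1, 6, 1))
out_b = np.zeros((1, 3, 1))

# Stacking along the batch axis needs every other axis to match, so this raises.
try:
    np.concatenate([out_a, out_b])
    failed = False
except ValueError:
    failed = True

# Workaround sketch: keep one array per sequence instead of a single stacked array.
per_sequence = [out_a[0], out_b[0]]
shapes = [p.shape for p in per_sequence]

print(failed)  # True
print(shapes)  # [(6, 1), (3, 1)]
```

In practice this means calling predict once per sequence (or per group of equal-length sequences) and collecting the results yourself.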

When processing English text, stemming and lemmatization should also be applied before word embedding in order to reduce the vocabulary size, for example turning the plural noun and inflected verb forms comes, horses into the stems come, hors.

Example

import nltk
ps = nltk.stem.PorterStemmer()
print(ps.stem("comes"))   # come
print(ps.stem("horses"))  # hors

If you only want to turn plural forms into singular forms, install the Pattern3 library with the following command:

pip install Pattern3

If you run into the following error when using it after installation:

File "/home/xxx/anaconda3/lib/python3.7/site-packages/pattern3/text/tree.py", line 37
    except:
    ^
IndentationError: expected an indented block

edit the file /home/xxx/anaconda3/lib/python3.7/site-packages/pattern3/text/tree.py and change the code at line 34 from:

from itertools import chain
try:
except:
    izip = zip # Python 3

to:

try:
    from itertools import chain
except:
    izip = zip # Python 3

If the package index is slow to reach from within China, create the file pip.conf under ~/.pip/ with the following content:

[global]
index-url = https://mirrors.aliyun.com/pypi/simple/

Example

from pattern3.en import singularize
print(singularize("comes"))
print(singularize("horses"))

A better option is to lemmatize with NLTK, which handles tense as well as singular and plural forms:

from nltk.stem.wordnet import WordNetLemmatizer
words = ['gave','went','going','dating','comes','horses']
for word in words:
    print(word + "-->" + WordNetLemmatizer().lemmatize(word, 'v'))
#gave-->give
#went-->go
#going-->go
#dating-->date
#comes-->come
#horses-->horse

Pretrained word2vec models are often extremely heavy. GoogleNews-vectors-negative300.bin, for example, takes nearly 4 GB of memory once loaded with gensim.models.KeyedVectors.load_word2vec_format(), which easily leads to a MemoryError during training. The corpus at hand most likely has a much smaller vocabulary, and most of the words in the model are never used, so it is worth trimming the model. The following code does that.

import gensim
import nltk
import csv
import numpy as np

tokenizer = nltk.tokenize.RegexpTokenizer(r'\w+')

def find_minimal_vocab(X):
    # Collect every token that appears in the two text columns of each row
    vocab = set()
    for Xi in X:
        for j in range(2):
            vocab = vocab.union(set(tokenizer.tokenize(Xi[j])))
    return vocab

def restrict_w2v(w2v, restricted_word_set):
    # minify the word vector model
    # Written against the gensim 3.x attribute names (vocab, index2entity, index2word);
    # gensim 4 renamed them.
    #w2v.init_sims()
    new_vectors = []
    new_vocab = {}
    new_index2entity = []
    new_vectors_norm = []
    for i in range(len(w2v.vocab)):
        word = w2v.index2entity[i]
        vec = w2v.vectors[i]
        vocab = w2v.vocab[word]
        #vec_norm = w2v.vectors_norm[i]
        if word in restricted_word_set:
            vocab.index = len(new_index2entity)
            new_index2entity.append(word)
            new_vocab[word] = vocab
            new_vectors.append(vec)
            #new_vectors_norm.append(vec_norm)
    w2v.vocab = new_vocab
    w2v.vectors = np.array(new_vectors)
    w2v.index2entity = new_index2entity
    w2v.index2word = new_index2entity
    #w2v.vectors_norm = new_vectors_norm

wv_model = gensim.models.KeyedVectors.load_word2vec_format(input("Path and file name of the model to trim: "), binary=True)

M = []
with open(input("Path and file name of your training set: "), newline='\n', encoding='utf8') as csvfile:
    reader = csv.reader(csvfile, delimiter='\t', quotechar='\t')
    for row in reader:
        M.append(row)
X = [i[5:7] for i in M]
Y = [i[4] for i in M]
del M

Md = []
with open(input("Path and file name of your development set: "), newline='\n', encoding='utf8') as csvfile:
    reader = csv.reader(csvfile, delimiter='\t', quotechar='\t')
    for row in reader:
        Md.append(row)
Xd = [i[5:7] for i in Md]
Yd = [i[4] for i in Md]
del Md

Mt = []
with open(input("Path and file name of your test set: "), newline='\n', encoding='utf8') as csvfile:
    reader = csv.reader(csvfile, delimiter='\t', quotechar='\t')
    for row in reader:
        Mt.append(row)
Xt = [i[5:7] for i in Mt]
Yt = [i[4] for i in Mt]
del Mt

v1 = find_minimal_vocab(X)
v2 = find_minimal_vocab(Xd)
v3 = find_minimal_vocab(Xt)
vocab = v1.union(v2, v3)
del v1
del v2
del v3

restrict_w2v(wv_model, vocab)
wv_model.save_word2vec_format(input("Output path and file name for the trimmed model: "), binary=True)
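The core of the trimming step is independent of gensim: keep the rows of the embedding matrix whose words occur in the corpus vocabulary and rebuild the word-to-row index. The following NumPy-only sketch shows that idea on toy data; restrict_vectors and all sample values are hypothetical and only meant as an illustration.

```python
import numpy as np

def restrict_vectors(words, vectors, keep):
    # Keep only the rows whose word is in `keep`, preserving the original order,
    # and rebuild the word -> row index for the smaller matrix.
    rows = [i for i, w in enumerate(words) if w in keep]
    kept_words = [words[i] for i in rows]
    new_index = {w: i for i, w in enumerate(kept_words)}
    return kept_words, vectors[rows], new_index

words = ["the", "cat", "sat", "mat"]
vectors = np.arange(8, dtype=np.float32).reshape(4, 2)  # one 2-d vector per word

kept_words, kept_vectors, index = restrict_vectors(words, vectors, {"cat", "mat", "dog"})

print(kept_words)            # ['cat', 'mat']
print(kept_vectors.tolist()) # [[2.0, 3.0], [6.0, 7.0]]
print(index)                 # {'cat': 0, 'mat': 1}
```

Words in the corpus vocabulary that the model does not know ("dog" here) are simply ignored, which mirrors the behavior of the loop over the model's own vocabulary.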

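N: turn on note input

[A-G]: enter the note at the corresponding position on the staff

Ctrl+Up: move the just-entered note (shown in blue) up an octave

Ctrl+Down: move the just-entered note (shown in blue) down an octave

W: double the duration of the just-entered note (shown in blue)

Q: halve the duration of the just-entered note (shown in blue)

Shift+W: lengthen the just-entered note (shown in blue) by a dot

Shift+Q: shorten the just-entered note (shown in blue) by a dot

Number keys 1-6: set the duration of the next note directly

Shift+[A-G]: add another chord note on top of the just-entered note (shown in blue)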


How do you write a C library for Lua (call C code / a C dynamic library from Lua)?

Install LuaRocks:

.\install.bat /LUA "C:\lua" /INC "C:\lua\include" /LIB "C:\lua\lib" /BIN "C:\lua\bin" /MW /P "C:\luarocks"

Write the C source (src/mylib.c, the path used by the rockspec further down):

#include <math.h>
#include "lua.h"
#include "lauxlib.h"
#include "lualib.h"

//my C function to be registered to Lua
static int l_sin (lua_State *L) {
    double d = lua_tonumber(L, 1); /* get argument */
    lua_pushnumber(L, sin(d)); /* push result */
    return 1; /* number of results */
}

static const struct luaL_Reg mylib[]={{"mysin", l_sin},{NULL,NULL}};

int luaopen_mylib(lua_State *L) {
    luaL_newlib(L, mylib);
    return 1;
}

Go back to the parent directory, open the file mylib-dev-1.rockspec and enter the following:

package = "mylib"
version = "dev-1"
source = {
    url = ""
}
description = {
    homepage = "*** please enter a project homepage ***",
    license = "*** please specify a license ***"
}
dependencies = {
    "lua >= 5.1, < 5.4"
    -- If you depend on other rocks, add them here
}
build = {
    type = "builtin",
    modules = {
        mylib = {"src/mylib.c"}
    }
}

Run luarocks make in the mylib directory to compile the C library, then open the Lua interpreter and enter m=require("mylib") to load it.

If you do not want to install LuaRocks, you can also build mylib.dll directly with the following commands:

gcc -O2 -c -o src/mylib.o -IC:\lua\include src/mylib.c
gcc -shared -o mylib.dll src/mylib.o C:\lua\lib/lua53.dll -lm

Then add .\?.dll to the LUA_CPATH environment variable, after which require("mylib") loads the compiled C library from the current directory. (Note: do not add .\?.dll to LUA_PATH, otherwise the ?.dll file is opened as a Lua script and a syntax error is reported.)

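How do you print all methods of a variable a, like Python's dir(a)?

for k in pairs(getmetatable(a).__index) do print(k) end

How do you imitate C's a?b:c expression?

a and b or c  -- yields c whenever b is false or nil

How do you make object a take its methods from b?

setmetatable(a,{__index=b})

How do you give x the default value v?

x = x or v

How do you truncate x to two decimal places?

x = x - x%0.01

How do you round x to the nearest integer?

x = math.floor(x + 0.5)

How do you convert x to a float?

x = x + 0.0

How do you force x to an integer?

x = x | 0  -- Lua 5.3+; raises an error if x has a fractional part

How do you turn the string s into an array holding the numeric value of each character?

array = {string.byte(s, 1, -1)}

How do you index the numeric value of a character by its position (e.g. the 5th character)?

value = utf8.codepoint(s, utf8.offset(s, 5))

How do you append to array a as in Python?

a[#a + 1] = v
table.insert(a, v)

How do you shift the elements of array a from i through j to positions i+d through j+d?

table.move(a, i, j, i+d)

How do you pack the arguments of the function nonils into an array arg?

function nonils (...)
    local arg = table.pack(...)
    for i = 1, arg.n do
        if arg[i] == nil then return false end
    end
    return true
end

How do you unpack array a and use it as the arguments of function f?

f(table.unpack(a))

How do you match whitespace-separated substrings of a string?

string.match(s, "(%S+)%s+(%S+)")

How do you split a string like Python's inputstr.split(sep)?

function mysplit (inputstr, sep)
    if sep == nil then
        sep = "%s"
    end
    local t={}
    for str in string.gmatch(inputstr, "([^"..sep.."]+)") do
        table.insert(t, str)
    end
    return t
end

How do you implement a linked list?

list = {next = list, value = v} -- insert at the head

How do you implement a set?

set[element] = true

How do you implement a bag?

bag[element] = (bag[element] or 0) + 1

How do you join a list of substrings t into a string s, like Python's s="\n".join(t)?

t[#t + 1] = ""  -- optional: the empty last element adds a trailing newline
s = table.concat(t, "\n")

How do you load only the C dynamic library rather than a Lua script of the same name?

package.path = "" -- clear the search path for Lua scripts
local amoeba = require "amoeba"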

Installing Happy with the cabal package manager

# Build and install from source
cabal get happy && cd happy* && cabal configure && cabal install
# Install directly from Hackage. The flags --ghc-options="+RTS -M200M" -j1
# keep parallel compilation from using too much memory and exhausting it;
# they can be dropped if memory is plentiful. Add --lib when installing a
# Haskell library; it is not needed when installing an executable with cabal.
cabal install happy --ghc-options="+RTS -M200M" -j1

How do you view the signatures of all functions in a module from GHCi?

import Data.List
:browse Data.List

import as ?

import qualified Data.Map as Map

How do you view a type, a kind (kinds: types of types), and help information?

:t []
:kind Monad
:i Monad

Partial application of a two-argument function f

-- elem :: (Foldable t, Eq a) => a -> t a -> Bool
-- Check whether a number is in the list [1..4]
isInList :: Int -> Bool
isInList = (`elem` [1..4])
-- Check whether 4 is in a list
hasElem :: [Int] -> Bool
hasElem = elem 4

Implementing XOR for the Bool type

{-# LANGUAGE FlexibleInstances #-}
class CanXOR a where
    xor :: a -> a -> a
instance CanXOR [Bool] where
    xor a b = map (\(x,y) -> x /= y) (zip a b)
instance CanXOR Bool where
    xor a b = a /= b

List comprehensions

import Data.List
[(x, y, a) | x <- [1 .. 10], y <- [2..5], even (x+y), let a = 3]
perms [] = [[]]
perms xs = [ x:ps | x <- xs , ps <- perms ( xs\\[x] ) ]

Reading an array of integers from standard input and printing it

main = do
    s <- getLine
    let nums = map (\x -> read x :: Int) $ words s
    print nums

Implementing where with lambda calculus

sumSquareOrSquareSum x y = if sumSquare > squareSum
                              then sumSquare
                              else squareSum
    where sumSquare = x^2 + y^2
          squareSum = (x+y)^2
-- The two definitions are equivalent
sumSquareOrSquareSum x y = (\sumSquare squareSum ->
                               if sumSquare > squareSum
                                  then sumSquare
                                  else squareSum) (x^2 + y^2) ((x+y)^2)

Implementing let with lambda calculus

overwrite x = let x = 2
              in
                let x = 3
                in
                  let x = 4
                  in
                    x
-- The two definitions are equivalent
overwrite x = (\x ->
                (\x ->
                  (\x -> x) 4
                ) 3
              ) 2

Implementing variable shadowing (shadowing x) with lambda calculus

-- In a lambda expression (\x->content), the x inside content shadows the outer x.
-- Each of the three lambdas adds 1, so the function adds 3 in total.
add3 x = (\x->(\x->(\x->x+1) x +1) x +1) x

GCD

-- Named myGcd so that the recursive call does not clash with Prelude's gcd
myGcd a 0 = a
myGcd a b = myGcd b (mod a b)

Implementing take

myTake 0 x = []
myTake n [] = []
myTake n (x:xs) = x:(myTake (n-1) xs)

Implementing drop

myDrop 0 xs = xs
myDrop n [] = []
myDrop n (_:xs) = myDrop (n-1) xs

Implementing reverse

myReverse [] = []
myReverse (x:xs) = (myReverse xs) ++ [x]

Two ways to implement the Fibonacci sequence

-- Naive recursion with a very deep call tree (very slow)
fib 0 = 0
fib 1 = 1
fib n = fib (n-1) + fib (n-2)
-- A faster algorithm; call it as fastFib 1 1 n
fastFib n1 n2 1 = n2
fastFib n1 n2 counter = fastFib n2 (n1+n2) (counter-1)

Implementing remove, the opposite of filter

remove test x = filter (\e->(not (test e))) x

Checking whether a string is a palindrome

import Data.Char (toLower)
isPalindrome x = y == (reverse y) where y = filter (\e->e/=' ') (map toLower x)
-- isPalindrome "A man a plan a canal Panama" -> True

Computing the harmonic series

harmonic n = foldl (\x y->x+1/y) 0 [1..n]

Implementing objects and classes

-- The obj class stores a number
obj num = \get -> get num
-- The getNum method retrieves the number stored in the object
getNum ins = ins id
-- The subtractByN method changes the object's state (by returning a new object)
subtractByN ins n = obj ( (getNum ins) - n)
-- The isNeg method checks whether the stored value is less than 0
isNeg ins = (getNum ins) < 0

Creating a new Robot type with record syntax

data Robot = Robot {
    name :: Name,
    attack :: Attack,
    hp :: HP }

Updating a field of the record named r

setAttack r newAttack = r { attack=newAttack}

Implementing a succ that wraps around (not part of the Enum type class)

cycleSucc :: (Bounded a, Enum a, Eq a) => a -> a
cycleSucc n = if n == maxBound then minBound else succ n

Implementing a die type

data SixSidedDie = S1 | S2 | S3 | S4 | S5 | S6 deriving (Eq, Ord, Enum)
instance Show SixSidedDie where
    show S1 = "one"
    show S2 = "two"
    show S3 = "three"
    show S4 = "four"
    show S5 = "five"
    show S6 = "six"

Ordering by the second element of a tuple

data Name = Name (String, String) deriving (Show, Eq)
instance Ord Name where
    compare (Name (f1,l1)) (Name (f2,l2)) = compare (l1,f1) (l2,f2)

Implementing a color-addition monoid with Data.Semigroup and guards

data Color = Red |
    Yellow |
    Blue |
    Green |
    Purple |
    Orange |
    Brown |
    Clear deriving (Show,Eq)
instance Semigroup Color where
    (<>) Clear any = any
    (<>) any Clear = any
    (<>) a b | a == b = a
             | all (`elem` [Red,Blue,Purple]) [a,b] = Purple
             | all (`elem` [Blue,Yellow,Green]) [a,b] = Green
             | all (`elem` [Red,Yellow,Orange]) [a,b] = Orange
             | otherwise = Brown
instance Monoid Color where
    mempty = Clear
    mappend = (<>)

Reading 3 lines from standard input and printing them

main :: IO ()
main = do
    vals <- mapM (\_ -> getLine) [1..3]
    mapM_ putStrLn vals

Taking the number of lines n from the command-line arguments, reading n lines of numbers from standard input, and summing them

-- Filename: sum.hs --
import System.Environment
import Control.Monad
main :: IO ()
main = do
    args <- getArgs
    let linesToRead = if length args > 0
                         then read (head args)
                         else 0 :: Int
    numbers <- replicateM linesToRead getLine
    let ints = map read numbers :: [Int]
    print (sum ints)

-- Lazy evaluation handles this more neatly
-- Filename: sum_lazy.hs
toInts :: String -> [Int]
toInts = map read . lines
main :: IO ()
main = do
    userInput <- getContents
    let numbers = toInts userInput
    print (sum numbers)

Functor, Applicative, and Monad illustrated

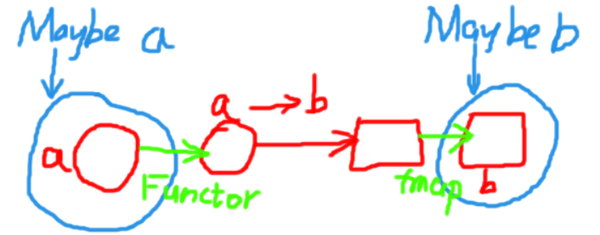

Functor

Functor类型类中最重要的函数是fmap,类型签名是fmap :: (a -> b) -> f a -> f b,如果把f换成Maybe,那么正是上图表示的功能,它可以接受一个a->b的函数和一个在Maybe上下文中的a类型,输出在Maybe上下文中的b类型。

例子:

fmap (+1) (Just 1) -- Output: Just 2

(+1) <$> (Just 1) -- Output: Just 2

fmap (+1) Nothing -- Output: Nothing

(+1) <$> Nothing -- Output: Nothing

show <$> (Just 1) -- Output: Just "1"

Applicative

Applicative类型类中最重要的函数是pure :: a -> f a和(<*>) :: f (a -> b) -> f a -> f b。

pure可以通过将函数Maybe a -> b和pure:: b -> Maybe b复合,得到Maybe a->Maybe b的函数。

<*>可以接受在Maybe上下文的a和在Maybe上下文中的a->b类型函数(函数可能不存在),输出Maybe上下文中的b。

例子:

sum3 :: Int -> Int -> Int -> Int

sum3 x y z = x+y+z

-- 这一步只能用fmap(<$>)

sumFunctor :: Maybe (Int -> Int -> Int)

sumFunctor = sum3 <$> (Just 1)

-- 这一步只能用(<*>)

sumApplicative :: Maybe (Int -> Int)

sumApplicative = sumFunctor <*> (Just 2)

-- 总结一下:在Maybe context下对多个参数的pure函数传参可以简单地写成:

sum3 <$> (Just 1) <*> (Just 2) <*> (Just 3)

-- 等价的写法

pure sum3 <*> (Just 1) <*> (Just 2) <*> (Just 3)

-- 列表也是一个context

-- 两个列表中元素两两相加

pure (+) <*> [1..4] <*> [4..7]

-- 笛卡尔积

pure (*) <*> [1..4] <*> [4..7]

-- 构建2元组

pure (,) <*> [1..4] <*> [4..7]

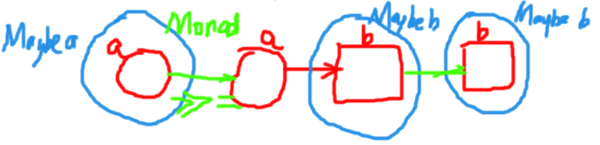

Monad

Monad类型类中最重要的函数是(>>=) :: m a -> (a -> m b) -> m b。此类型签名与上图几乎完全一样,m对应Maybe。