Reinforcement learning hyperparameter tuning notes


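Polyak averaging (exponentially weighted averaging of the target network): $\tau$ is the averaging coefficient. It behaves like a learning rate in its own right and is very sensitive: increasing it by as little as 0.01 can make training diverge. As a rule of thumb, choose $\tau$ so that $(1-\tau)^{n}$ is about 10%, where n is the number of episodes in one epoch. In short, aim for the Actor loss to blow up to roughly $(1-\gamma^T)/(1-\gamma)$ within 100 epochs, where $\gamma$ is the discount factor and T is the maximum number of time steps per episode. The Critic loss blows up along with it, and afterwards both slowly decrease.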
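A minimal sketch of the two quantities in this rule of thumb. It assumes the common soft-update form $\theta' \leftarrow \tau\theta + (1-\tau)\theta'$ applied once per episode, and per-step rewards of magnitude at most 1; the function names are hypothetical. Solving $(1-\tau)^n = 0.1$ gives $\tau = 1 - 0.1^{1/n}$, and $(1-\gamma^T)/(1-\gamma) = \sum_{t=0}^{T-1}\gamma^t$ is the largest possible discounted return, i.e. the scale that Q values (and an Actor loss of the form $-Q$) can reach under that reward assumption.

```python
import numpy as np

def polyak_tau(n, remaining=0.1):
    # Solve (1 - tau) ** n = remaining: after n soft updates,
    # about `remaining` of the old target weights are left
    return 1.0 - remaining ** (1.0 / n)

def soft_update(target, online, tau):
    # Polyak averaging: target <- tau * online + (1 - tau) * target
    return tau * online + (1.0 - tau) * target

def q_scale(gamma, T):
    # sum_{t=0}^{T-1} gamma**t = (1 - gamma**T) / (1 - gamma)
    return (1.0 - gamma ** T) / (1.0 - gamma)

tau = polyak_tau(200)  # e.g. 200 episodes per epoch (assumed value)
target = soft_update(np.zeros(3), np.ones(3), tau)
print(tau, target, q_scale(0.99, 200))
```

With 200 updates per epoch this gives a $\tau$ of roughly 0.011, which shows why a change of 0.01 is large: it nearly doubles the averaging rate.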

The replay buffer can be fairly small; a few hundred episodes is enough. This keeps the agent from memorizing incorrect rewards.
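A minimal sketch of a buffer whose capacity is counted in episodes rather than transitions, so that old (possibly wrong) experience is evicted automatically. The class name and the default of 300 episodes are illustrative assumptions chosen from the "few hundred" range above.

```python
import random
from collections import deque

class EpisodeReplayBuffer:
    """Keeps only the most recent `max_episodes` episodes (hypothetical helper)."""

    def __init__(self, max_episodes=300):
        # deque(maxlen=...) silently drops the oldest episode when full
        self.episodes = deque(maxlen=max_episodes)

    def add_episode(self, transitions):
        # transitions: list of (state, action, reward, next_state, done)
        self.episodes.append(list(transitions))

    def sample(self, batch_size):
        # flatten the stored episodes and draw transitions uniformly
        pool = [t for ep in self.episodes for t in ep]
        return random.sample(pool, min(batch_size, len(pool)))

buf = EpisodeReplayBuffer(max_episodes=2)
for ep in range(3):
    buf.add_episode([(ep, 0, 0.0, ep, True)])
print(len(buf.episodes))  # only the 2 newest episodes are kept
```

Flattening on every call is O(buffer size), which is acceptable precisely because the buffer is kept small.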

Gamma can be tuned by watching the Q value at each step of an episode: if Q changes too abruptly just before and after a reward appears, increase gamma.
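To see why a small gamma produces an abrupt jump, here is a sketch of the ideal Q target (the discounted return-to-go) for a made-up episode with a single sparse reward at the last step. With a small gamma the target collapses within a few steps of the reward; with a gamma close to 1 it ramps up gradually.

```python
import numpy as np

def discounted_return_to_go(rewards, gamma):
    # G_t = r_t + gamma * G_{t+1}, computed backwards from the last step
    g = 0.0
    out = np.zeros(len(rewards))
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        out[t] = g
    return out

rewards = np.zeros(50)
rewards[-1] = 1.0  # a single sparse reward at the last step
for gamma in (0.8, 0.99):
    q = discounted_return_to_go(rewards, gamma)
    # ratio of the target 10 steps before the reward to the target at the reward
    print(gamma, q[-11] / q[-1])
```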

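Tune the batch size according to how unstable the Critic loss is: if the Critic loss fluctuates too violently, increase the batch size, but not so far that training becomes too slow.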
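The reason a larger batch calms the Critic loss: the minibatch mean of roughly independent per-sample losses has a standard deviation of about $\sigma/\sqrt{B}$, so quadrupling the batch size B roughly halves the noise while quadrupling the compute per step. A synthetic NumPy illustration (the exponentially distributed losses are made up, not real RL data):

```python
import numpy as np

rng = np.random.default_rng(0)
# synthetic per-sample critic losses (a made-up stand-in for squared TD errors)
per_sample_loss = rng.exponential(scale=1.0, size=100_000)

def batch_mean_std(losses, batch_size, n_batches=2000):
    # spread of the minibatch-mean loss across randomly drawn minibatches
    idx = rng.integers(0, len(losses), size=(n_batches, batch_size))
    return losses[idx].mean(axis=1).std()

for b in (32, 128, 512):
    # theory: std of one sample / sqrt(b), i.e. about 1 / sqrt(b) here
    print(b, batch_mean_std(per_sample_loss, b))
```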


The optimization problem (soft-margin SVM dual):

$$ \begin{align*} &\max_a & & \sum_{i=1}^m a_i - \frac{1}{2} \sum_{i=1}^m\sum_{j=1}^m a_i a_j y_i y_j x_i^\top x_j \label{softsvmd}\tag{$\mathrm{SVM_{soft} dual}$}\\ &s.t. \ & & \sum_{i=1}^m a_i y_i=0 \\ & & & 0\leq a_i \leq C,\ i=1,\cdots,m \tag{Box Constraint} \end{align*} $$
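Once the multipliers $a$ are known, the primal parameters follow from the KKT conditions: $w=\sum_i a_i y_i x_i$, and for every free support vector with $0<a_j<C$, $b = y_j - w^\top x_j$ (averaging over all free support vectors is more robust than picking one). A NumPy sketch for the linear kernel; the function name and the tolerance eps are illustrative assumptions. Applied to the solver code below, it would be called as recover_primal(a.value, x, y, C).

```python
import numpy as np

def recover_primal(a, x, y, C, eps=1e-6):
    # a: (m,) multipliers, x: (m, d) samples, y: (m,) labels in {-1, +1}
    a, y = np.ravel(a), np.ravel(y)
    w = (a * y) @ x                     # w = sum_i a_i y_i x_i
    free = (a > eps) & (a < C - eps)    # free support vectors
    if not free.any():
        raise ValueError("no free support vector; b is not uniquely determined")
    b = np.mean(y[free] - x[free] @ w)  # b = y_j - w^T x_j, averaged
    return w, b

# two points whose dual solution is a = [0.5, 0.5]
x = np.array([[1.0, 0.0], [-1.0, 0.0]])
y = np.array([1.0, -1.0])
w, b = recover_primal(np.array([0.5, 0.5]), x, y, C=1.0)
print(w, b)
```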

Solver code (using cvxpy):

```python
import numpy as np
import cvxpy as cp

x = np.array([[0,0],[1,0],[0,-1],[-1,1],[-2,1],[-1,2]])  # training samples
y = np.array([[-1],[-1],[-1],[1],[1],[1]])               # training labels
m = len(y)            # number of samples
d = x.shape[1]        # dimension of samples
a = cp.Variable((m,1))  # Lagrange multipliers
C = 1                 # trade-off parameter

yx = y * x            # row i is y_i * x_i
# a^T G a with the Gram matrix G = (yx)(yx)^T equals ||(yx)^T a||^2; writing it
# with sum_squares avoids relying on cvxpy's numerical PSD check of a rank-deficient G
objective = cp.Maximize(cp.sum(a) - (1/2) * cp.sum_squares(yx.T @ a))
constraints = [0 <= a, a <= C,                   # box constraint
               cp.sum(cp.multiply(a, y)) == 0]  # equality constraint
prob = cp.Problem(objective, constraints)
result = prob.solve()
print(a.value)
```

Multiclass SVM

```python
import numpy as np
import cvxpy as cp

def rbf(sigma=1):
    def rbf_kernel(x1, x2, sigma):
        # squared Euclidean distances between all rows of x1 and x2
        X12norm = np.sum(x1**2, 1, keepdims=True) - 2*x1@x2.T + np.sum(x2**2, 1, keepdims=True).T
        return np.exp(-X12norm/(2*sigma**2))
    return lambda x1, x2: rbf_kernel(x1, x2, sigma)

def poly(n=3):
    return lambda x1, x2: (x1 @ x2.T)**n

class svm_model_cvxpy:
    def __init__(self, m, n_class):
        self.n_svm = n_class * (n_class - 1)//2  # one-vs-one: one SVM per class pair
        self.m = m  # number of samples
        self.n_class = n_class
        # multipliers (nonneg=True enforces the lower box constraint a >= 0)
        self.a = [cp.Variable(shape=(m,1), nonneg=True) for i in range(self.n_svm)]
        # bias
        self.b = np.zeros((self.n_svm,1))
        # kernel function takes x [n,d] and y [m,d] and returns [n,m]
        # Example kernels: rbf(1.0), poly(3)
        self.kernel = rbf(1)
        # Binary setting for every SVM:
        # M[i,j] is the label SVM j should give to samples of class i
        self.lookup_matrix = np.zeros((self.n_class, self.n_svm))
        # The two classes SVM i handles: lookup_class[i] = [pos, neg]
        self.lookup_class = np.zeros((self.n_svm, 2))
        k = 0
        for i in range(n_class-1):
            for j in range(i+1, n_class):
                self.lookup_class[k, 0] = i
                self.lookup_class[k, 1] = j
                k += 1
        for i in range(n_class):
            for j in range(self.n_svm):
                if i == self.lookup_class[j, 0]:
                    self.lookup_matrix[i, j] = 1.0
                elif i == self.lookup_class[j, 1]:
                    self.lookup_matrix[i, j] = -1.0

    def fit(self, x, y_multiclass, kernel=rbf(1), C=0.001):
        y_multiclass = y_multiclass.reshape(-1)
        self.x = x
        self.y_multiclass = y_multiclass
        self.kernel = kernel
        self.C = C
        self.y_matrix = np.stack([self.cast(y_multiclass, k) for k in range(self.n_svm)], 0)
        for k in range(self.n_svm):
            print("training ", k, "th SVM in ", self.n_svm)
            y = self.y_matrix[k, :].reshape((-1,1))
            # G_ij = y_i y_j K(x_i, x_j). K(y_i x_i, y_j x_j) only equals this for the
            # linear (or odd-degree polynomial) kernel, not for rbf
            G = (y @ y.T) * kernel(x, x)
            G = 0.5 * (G + G.T)  # remove floating-point asymmetry before quad_form
            objective = cp.Maximize(cp.sum(self.a[k]) - (1/2)*cp.quad_form(self.a[k], G))
            if not objective.is_dcp():
                print("Not solvable!")
            assert objective.is_dcp()
            constraints = [self.a[k] <= C,                            # upper box constraint
                           cp.sum(cp.multiply(self.a[k], y)) == 0]
            prob = cp.Problem(objective, constraints)
            result = prob.solve()
            x_pos = x[y[:,0]==1, :]
            x_neg = x[y[:,0]==-1, :]
            b_min = -np.min(self.wTx(k, x_pos)) if x_pos.shape[0] != 0 else 0
            b_max = -np.max(self.wTx(k, x_neg)) if x_neg.shape[0] != 0 else 0
            self.b[k, 0] = (1/2)*(b_min + b_max)
        self.a_matrix = np.stack([i.value.reshape(-1) for i in self.a], 0)

    def predict(self, xp):
        # decision values of all pairwise SVMs: [n_svm, n_samples]
        k_predicts = (self.y_matrix * self.a_matrix) @ self.kernel(xp, self.x).T + self.b
        # combine pairwise decisions into per-class scores and pick the best class
        result = np.argmax(self.lookup_matrix @ k_predicts, axis=0)
        return result

    def cast(self, y, k):
        # map multiclass labels to +1 (pos class of SVM k), -1 (neg class), 0 (other classes)
        return (y==self.lookup_class[k, 0]).astype(np.float32) - (y==self.lookup_class[k, 1]).astype(np.float32)

    def wTx(self, k, xi):
        # prediction of SVM k without bias, w^T @ xi
        y = self.y_matrix[k, :].reshape((-1,1))
        a = self.a[k].value.reshape(-1,1)
        wTx0 = self.kernel(xi, self.x) @ (y * a)
        return wTx0

    def get_avg_pct_spt_vec(self):
        # average fraction of (free) support vectors;
        # the test error should not exceed it if training converges
        return np.sum((0.0<self.a_matrix) & (self.a_matrix<self.C)).astype(np.float32)/(self.n_svm*self.m)
```
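To see how lookup_matrix turns the $\binom{n}{2}$ pairwise decision values into a class, here is the same construction in isolation for three classes; the decision values are made up and the helper name is hypothetical. Note that predict() sums the raw decision values weighted by ±1 rather than counting hard votes; a hard-vote variant would apply np.sign to the scores first.

```python
import numpy as np

def ovo_lookup(n_class):
    # column j is the pair (pos, neg): +1 in row pos, -1 in row neg
    pairs = [(i, j) for i in range(n_class - 1) for j in range(i + 1, n_class)]
    M = np.zeros((n_class, len(pairs)))
    for col, (pos, neg) in enumerate(pairs):
        M[pos, col] = 1.0
        M[neg, col] = -1.0
    return M, pairs

M, pairs = ovo_lookup(3)            # pairs: (0,1), (0,2), (1,2)
# decision values of the three pairwise SVMs (rows) for two test samples (columns)
scores = np.array([[-0.9,  1.2],    # SVM (0,1)
                   [-0.2,  0.4],    # SVM (0,2)
                   [ 0.7, -0.3]])   # SVM (1,2)
print(np.argmax(M @ scores, axis=0))  # [1 0]
```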

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session)
import numpy as np
import operator as op
from functools import reduce

class svm_model:
    def __init__(self, n_class, dimension, learning_rate=1e-2, regularization=1):
        self.learning_rate = learning_rate
        self.n_class = n_class
        self.dimension = dimension
        n_svm = self.ncr(n_class, 2)  # one-vs-one: one binary SVM per class pair
        self.logit = []
        self.logit_tmp = []
        self.loss = []
        self.correct_logit = []
        self.lookup_class = dict()
        self.w_model = np.random.uniform(0, 1, (n_svm, dimension)).astype(np.float32)
        self.b_model = np.random.uniform(0, 1, (n_svm, 1)).astype(np.float32)
        self.lookup_matrix = np.zeros((n_class, n_svm), dtype=np.float32)
        self.batch_x = tf.placeholder(tf.float32, shape=(None, dimension), name="batch_x")
        self.batch_y = tf.placeholder(tf.float32, shape=(None, 1), name="batch_y")
        self.w = tf.Variable(tf.random_uniform([n_svm, self.dimension]))
        self.b = tf.Variable(tf.random_uniform([n_svm, 1]))
        self.batch_class_size = []
        k = 0
        for i in range(n_class-1):
            for j in range(i+1, n_class):
                self.lookup_class[k] = [i, j]
                k += 1
        for i in range(n_class):
            for j in range(n_svm):
                if i in self.lookup_class[j]:
                    self.lookup_matrix[i, j] = 1.0 if self.lookup_class[j][0] == i else -1.0
        for i in range(n_svm):
            # tf.where + tf.gather_nd is equivalent to a[condition is true]:
            # keep only the samples of the two classes SVM i handles
            idx = tf.where(tf.keras.backend.any(tf.equal(self.batch_y, self.lookup_class[i]), 1))
            self.logit.append(tf.matmul(tf.reshape(self.w[i, :], (1, dimension)),
                                        tf.gather_nd(self.batch_x, idx), transpose_b=True) + self.b[i, :])
            self.logit_tmp.append(tf.tanh(self.logit[i]))
            # +1 for the first class of the pair, -1 for the second
            self.correct_logit.append(self.zonp(tf.cast(
                tf.equal(tf.gather_nd(self.batch_y, idx), self.lookup_class[i][0]), tf.float32)))
            self.batch_class_size.append(tf.cast(tf.shape(self.correct_logit[i])[0], tf.float32))
            self.loss.append(tf.maximum(0.0, 1 - tf.matmul(self.logit_tmp[i], self.correct_logit[i])
                                        / self.batch_class_size[i])
                             + regularization * tf.norm(self.w[i, :], 2))
        scores = tf.tanh(tf.matmul(self.w, self.batch_x, transpose_b=True) + self.b)
        self.prediction = tf.argmax(tf.matmul(self.lookup_matrix, scores), axis=0)
        self.total_loss = sum(self.loss)
        self.opt = tf.train.RMSPropOptimizer(learning_rate).minimize(self.total_loss)

    def ncr(self, n, r):
        # binomial coefficient C(n, r)
        r = min(r, n-r)
        numer = reduce(op.mul, range(n, n-r, -1), 1)
        denom = reduce(op.mul, range(1, r+1), 1)
        return numer // denom

    def zonp(self, zero_one):
        # map {0, 1} to {-1, +1}
        return 2*zero_one - 1

    def fit(self, data_x, data_y, iter_time=1000):
        with tf.Session() as sess:
            sess.run(tf.global_variables_initializer())
            sess.run([tf.assign(self.w, self.w_model), tf.assign(self.b, self.b_model)])
            for _ in range(iter_time):
                _, loss_val, w_model, b_model = sess.run(
                    [self.opt, self.total_loss, self.w, self.b],
                    feed_dict={self.batch_x: data_x, self.batch_y: data_y})
                print(loss_val)
            # keep the trained weights so predict() can restore them in a new session
            self.w_model = w_model
            self.b_model = b_model
        return w_model, b_model

    def predict(self, data_x):
        with tf.Session() as sess:
            sess.run(tf.global_variables_initializer())
            sess.run([tf.assign(self.w, self.w_model), tf.assign(self.b, self.b_model)])
            result = sess.run(self.prediction, feed_dict={self.batch_x: data_x})
        return result.reshape(-1, 1)

def increase_dims(data):
    # degree-2 feature lift: [x_i] + [x_i * x_j for i < j] + [x_i ** 2], scaled by the global max
    _, dim0 = data.shape
    k = 0
    lookup_class = dict()
    for i in range(dim0-1):
        for j in range(i+1, dim0):
            lookup_class[k] = [i, j]
            k += 1
    result = np.hstack([data]
                       + [(data[:, lookup_class[i][0]]*data[:, lookup_class[i][1]]).reshape(-1, 1)
                          for i in range(dim0*(dim0-1)//2)]
                       + [data[:, i].reshape(-1, 1)**2 for i in range(dim0)])
    return result/np.max(result)
```

After loading the data, first arrange the training data as an $n\times\text{dim}$ matrix and the labels as an $n\times 1$ column, transposing if necessary (n is the number of samples, dim the number of features). increase_dims() then lifts the features into a degree-2 nonlinear feature space with $\text{dim} + \binom{\text{dim}}{2} + \text{dim}$ dimensions (the original features, their pairwise products and their squares). Then fit and predict with:

```python
svm = svm_model(number_of_classes, train_x.shape[1], learning_rate, regularization)
svm.fit(train_x, train_y, iterations)
svm.predict(test_x)
```
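A compact equivalent of the lift, written with itertools.combinations (a sketch, not the author's code; the name quad_features is hypothetical). It produces the same columns in the same order as increase_dims(), which makes the output width $d + \binom{d}{2} + d = d(d+3)/2$ easy to verify:

```python
import itertools
import numpy as np

def quad_features(data):
    # originals, then pairwise products x_i * x_j (i < j), then squares
    _, d = data.shape
    cross = [data[:, i] * data[:, j] for i, j in itertools.combinations(range(d), 2)]
    squares = [data[:, i] ** 2 for i in range(d)]
    result = np.column_stack([data] + cross + squares)
    return result / np.max(result)  # global max scaling, as in increase_dims()

x = np.array([[1.0, 2.0, 3.0]])
print(quad_features(x) * 9)  # 9 is the largest raw feature (3 ** 2)
```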

```bash
conda install tensorflow-gpu
```

After installing tensorflow-gpu with the command above, I ran into this error:

CUDA driver version is insufficient for CUDA runtime version

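After some digging, I found that the cudatoolkit that Anaconda picked when resolving dependencies was CUDA 10.0 (10.0.130), while the NVIDIA driver that came with the Ubuntu installation was nvidia-384.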

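Check the NVIDIA driver version with:

```bash
nvidia-smi
```

Check the cudatoolkit, CUDA and cuDNN versions with:

```bash
conda list cudatoolkit
cat /usr/local/cuda/version.txt
cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2
```

NVIDIA's official table of CUDA toolkit versions and the minimum driver each one requires: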

| CUDA Toolkit | Linux x86_64 Driver Version |
|---|---|
| CUDA 10.2 (10.2.89) | >= 440.33 |
| CUDA 10.1 (10.1.105) | >= 418.39 |
| CUDA 10.0 (10.0.130) | >= 410.48 |
| CUDA 9.2 (9.2.88) | >= 396.26 |
| CUDA 9.1 (9.1.85) | >= 390.46 |
| CUDA 9.0 (9.0.76) | >= 384.81 |
| CUDA 8.0 (8.0.61 GA2) | >= 375.26 |
| CUDA 8.0 (8.0.44) | >= 367.48 |
| CUDA 7.5 (7.5.16) | >= 352.31 |
| CUDA 7.0 (7.0.28) | >= 346.46 |

So I looked up the CUDA version that matches nvidia-384: CUDA 9.0 (9.0.76).
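This table lookup can be scripted. A small sketch (the function name is hypothetical; the table only goes up to CUDA 10.2, so newer drivers simply map to 10.2 here). Driver versions are compared as integer tuples, so 384.130 correctly counts as newer than 384.81:

```python
# Minimum Linux x86_64 driver for each CUDA toolkit, from the table above (newest first)
MIN_DRIVER = [
    ("10.2.89", (440, 33)), ("10.1.105", (418, 39)), ("10.0.130", (410, 48)),
    ("9.2.88", (396, 26)), ("9.1.85", (390, 46)), ("9.0.76", (384, 81)),
    ("8.0.61 GA2", (375, 26)), ("8.0.44", (367, 48)),
    ("7.5.16", (352, 31)), ("7.0.28", (346, 46)),
]

def newest_supported_cuda(driver_version):
    # driver_version as reported by nvidia-smi, e.g. "384.130"
    parts = (driver_version.split(".") + ["0"])[:2]
    drv = tuple(int(p) for p in parts)
    for cuda, min_drv in MIN_DRIVER:
        if drv >= min_drv:
            return cuda
    return None  # driver older than every entry in the table

print(newest_supported_cuda("384.130"))  # 9.0.76
```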

Downgrading CUDA with the following command solves the problem:

```bash
conda install cudatoolkit=9.0 tensorflow-gpu
```

Sometimes the cudatoolkit version you need is not in the default channel; in that case, install the matching version from another channel:

```bash
conda install -c numba cudatoolkit=9.1 tensorflow-gpu
```

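When doing computer vision research with TensorFlow, you often hit a disk I/O bottleneck: CPU and disk utilization are very high while GPU utilization stays low. For this kind of I/O bottleneck, NVIDIA's open-source DALI library can speed up data loading. Below is a guide to installing and using it.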

Installation (requires TensorFlow 1.7 or later and CUDA 9.0 or later)

CUDA 9.0:

```bash
pip install --extra-index-url https://developer.download.nvidia.com/compute/redist/cuda/9.0 nvidia-dali-tf-plugin
```

CUDA 10.0:

```bash
pip install --extra-index-url https://developer.download.nvidia.com/compute/redist/cuda/10.0 nvidia-dali-tf-plugin
```

Example:

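```python
import tensorflow as tf
import nvidia.dali.ops as ops
import nvidia.dali.types as types
from nvidia.dali.pipeline import Pipeline
import nvidia.dali.plugin.tf as dali_tf

# example values: set these for your own data
batch_size = 8
dark_img_dir = "root/dark_img_dir"
gt_img_dir = "root/gt_img_dir"

class SimplePipeline(Pipeline):
    def __init__(self, batch_size, num_threads, device_id, img_dir):
        super(SimplePipeline, self).__init__(batch_size, num_threads, device_id, seed=12)
        self.input = ops.FileReader(file_root=img_dir)
        # 'mixed': encoded files are read on the CPU and decoded images end up on the GPU
        self.decode = ops.ImageDecoder(device='mixed', output_type=types.RGB)

    def define_graph(self):
        pngs, labels = self.input()
        images = self.decode(pngs)
        return images

dark_pipe = SimplePipeline(batch_size, 1, 0, dark_img_dir)
gt_pipe = SimplePipeline(batch_size, 1, 0, gt_img_dir)
daliop = dali_tf.DALIIterator()
# shapes should match the decoded image size (here 400x600 RGB)
in_image = daliop(pipeline=dark_pipe, shapes=[[batch_size, 400, 600, 3]], dtypes=[tf.uint8])
in_image = tf.to_float(in_image[0])/255.0
gt_image = daliop(pipeline=gt_pipe, shapes=[[batch_size, 400, 600, 3]], dtypes=[tf.uint8])
gt_image = tf.to_float(gt_image[0])/255.0

with tf.Session() as sess:
    in_img, gt_img = sess.run([in_image, gt_image])
```

The directory layout assumed above: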

```
root
 - dark_img_dir
    - images
    - labels
 - gt_img_dir
    - images
    - labels
```

The SVM code (one-vs-one multiclass SVM trained with SMO in PyTorch):

```python
import torch
import numpy as np
from random import shuffle
from sklearn.utils import shuffle as shuffle_ds

def rbf(sigma=1):
    def rbf_kernel(x1, x2, sigma):
        # squared Euclidean distances between all rows of x1 and x2
        X12norm = torch.sum(x1**2, 1, keepdim=True) - 2*x1@x2.T + torch.sum(x2**2, 1, keepdim=True).T
        return torch.exp(-X12norm/(2*sigma**2))
    return lambda x1, x2: rbf_kernel(x1, x2, sigma)

def poly(n=3):
    return lambda x1, x2: (x1 @ x2.T)**n

class svm_model_torch:
    def __init__(self, m, n_class, device="cpu"):
        self.device = device
        self.n_svm = n_class * (n_class - 1)//2
        self.m = m  # number of samples
        self.n_class = n_class
        # per SVM, the index pairs whose update changed nothing
        self.blacklist = [set() for i in range(self.n_svm)]
        # multipliers; SMO only works when a is initialized to 0
        self.a = torch.zeros((self.n_svm, self.m), device=self.device)
        # bias
        self.b = torch.zeros((self.n_svm, 1), device=self.device)
        # kernel function takes x [n,d] and y [m,d] and returns [n,m]
        # Example of a poly kernel: lambda x,y: torch.matmul(x,y.T)**2
        self.kernel = lambda x, y: torch.matmul(x, y.T)
        # Binary setting for every SVM:
        # M[i,j] is the label SVM j should give to samples of class i
        self.lookup_matrix = torch.zeros((self.n_class, self.n_svm), device=self.device)
        # The two classes SVM i handles: lookup_class[i] = [pos, neg]
        self.lookup_class = torch.zeros((self.n_svm, 2), device=self.device)
        k = 0
        for i in range(n_class-1):
            for j in range(i+1, n_class):
                self.lookup_class[k, 0] = i
                self.lookup_class[k, 1] = j
                k += 1
        for i in range(n_class):
            for j in range(self.n_svm):
                if i == self.lookup_class[j, 0]:
                    self.lookup_matrix[i, j] = 1.0
                elif i == self.lookup_class[j, 1]:
                    self.lookup_matrix[i, j] = -1.0

    def fit(self, x_np, y_multiclass_np, C, iterations=1, kernel=rbf(1)):
        x_np, y_multiclass_np = shuffle_ds(x_np, y_multiclass_np)
        self.C = C  # box constraint
        # use the SMO algorithm to fit
        x = torch.from_numpy(x_np).float() if not torch.is_tensor(x_np) else x_np
        x = x.to(self.device)
        self.x = x
        y_multiclass = torch.from_numpy(y_multiclass_np) if not torch.is_tensor(y_multiclass_np) else y_multiclass_np
        y_multiclass = y_multiclass.view(-1).to(self.device)
        self.y_matrix = torch.stack([self.cast(y_multiclass, k) for k in range(self.n_svm)], 0)
        self.kernel = kernel
        a = self.a
        b = self.b
        for iteration in range(iterations):
            print("Iteration: ", iteration)
            for k in range(self.n_svm):
                y = self.y_matrix[k, :].view(-1).tolist()
                # only the samples of the two classes this SVM handles
                index = [i for i in range(len(y)) if y[i] != 0]
                shuffle(index)
                # disjoint pairs (index[i], index[i+1]), plus the last pair so an odd leftover is covered
                traverse = list(range(0, len(index)-1, 2))
                if len(index) > 2:
                    traverse += [len(index)-2]
                for i in traverse:
                    i1, i2 = index[i], index[i+1]
                    if (i1, i2) in self.blacklist[k]:
                        continue
                    y1, y2 = y[i1], y[i2]
                    x1 = x[i1, :].view(1, -1)
                    x2 = x[i2, :].view(1, -1)
                    a1_old = a[k, i1].clone()
                    a2_old = a[k, i2].clone()
                    # bounds [L, H] that keep both multipliers inside the box
                    if y1 != y2:
                        H = max(min(self.C, (self.C + a2_old - a1_old).item()), 0)
                        L = min(max(0, (a2_old - a1_old).item()), self.C)
                    else:
                        H = max(min(self.C, (a2_old + a1_old).item()), 0)
                        L = min(max(0, (a2_old + a1_old - self.C).item()), self.C)
                    K11 = self.kernel(x1, x1).squeeze()
                    K12 = self.kernel(x1, x2).squeeze()
                    K22 = self.kernel(x2, x2).squeeze()
                    # eta = K11 + K22 - 2*K12 holds for any kernel;
                    # kernel(x1 - x2, x1 - x2) only equals it for the linear kernel
                    eta = K11 + K22 - 2*K12
                    if eta.item() <= 0:
                        continue
                    E1 = (self.g_k(k, x1) - y1).squeeze()
                    E2 = (self.g_k(k, x2) - y2).squeeze()
                    a2_new = torch.clamp(a2_old + y2*(E1 - E2)/eta, min=L, max=H)
                    a[k, i2] = a2_new
                    a1_new = a1_old - y1*y2*(a2_new - a2_old)
                    a[k, i1] = a1_new
                    b_old = b[k, 0].clone()  # clone: b[k, 0] alone would be a view that changes with b
                    b1_new = b_old - E1 + (a1_old - a1_new)*y1*K11 + (a2_old - a2_new)*y2*K12
                    b2_new = b_old - E2 + (a1_old - a1_new)*y1*K12 + (a2_old - a2_new)*y2*K22
                    if (0 < a1_new) and (a1_new < self.C):
                        b[k, 0] = b1_new
                    if (0 < a2_new) and (a2_new < self.C):
                        b[k, 0] = b2_new
                    if ((a1_new == 0) or (a1_new == self.C)) and ((a2_new == 0) or (a2_new == self.C)) and (L != H):
                        b[k, 0] = (b1_new + b2_new)/2
                    # a pair that changed nothing is skipped in later sweeps
                    if b_old == b[k, 0] and a[k, i1] == a1_old and a[k, i2] == a2_old:
                        self.blacklist[k].add((i1, i2))

    def predict(self, x_np):
        xp = torch.from_numpy(x_np) if not torch.is_tensor(x_np) else x_np
        xp = xp.float().to(self.device)
        # decision values of all pairwise SVMs: [n_svm, n_samples]
        k_predicts = (self.y_matrix * self.a) @ self.kernel(xp, self.x).T + self.b
        result = torch.argmax(self.lookup_matrix @ k_predicts, dim=0)
        return result.to("cpu").numpy()

    def cast(self, y, k):
        # map multiclass labels to +1 (pos class of SVM k), -1 (neg class), 0 (other classes)
        return (y == self.lookup_class[k, 0]).float() - (y == self.lookup_class[k, 1]).float()

    def wTx(self, k, xi):
        # prediction of SVM k without bias, w^T @ xi
        y = self.y_matrix[k, :].reshape((-1, 1))
        a = self.a[k, :].view(-1, 1)
        return self.kernel(xi, self.x) @ (y * a)

    def g_k(self, k, xi):
        # prediction of SVM k, xi: [1, d]
        return self.wTx(k, xi) + self.b[k, 0].view(1, 1)

    def get_w(self, k):
        # explicit weight vector; only meaningful for the linear kernel
        y = self.y_matrix[k, :].view(-1, 1)
        a = self.a[k, :].view(-1, 1)
        return torch.sum(a*y*self.x, 0).view(-1, 1)

    def get_svms(self):
        # print each pairwise decision function g_ij(x) = w^T x + b
        for k in range(self.n_svm):
            sk = 'g' + str(int(self.lookup_class[k, 0].item())) + str(int(self.lookup_class[k, 1].item())) + '(x)='
            w = self.get_w(k)
            for i in range(w.shape[0]):
                sk += "{:.3f}".format(w[i, 0].item()) + ' x' + "{:d}".format(i) + ' + '
            sk += "{:.3f}".format(self.b[k, 0].item())
            print(sk)

    def get_avg_pct_spt_vec(self):
        # average fraction of (free) support vectors;
        # the test error should not exceed it if training converges
        return torch.sum((0.0 < self.a) & (self.a < self.C)).float().item()/(self.n_svm*self.m)
```

The following example uses this SVM model:

```python
import numpy as np
#from svm_torch import *

data_x = np.array([[-2,1],[-2,2],[-1,1],[-1,2],[1,1],[1,2],[2,1],[2,2],[1,-1],[1,-2],[2,-1],[2,-2],[-2,-1],[-2,-2],[-1,-1],[-1,-2]])
data_y = np.array([[0],[0],[0],[0],[1],[1],[1],[1],[2],[2],[2],[2],[3],[3],[3],[3]])
m = len(data_x)
c = len(np.unique(data_y))
svm = svm_model_torch(m, c)    # arguments: number of samples, number of classes
svm.fit(data_x, data_y, 10)    # C = 10; one SMO sweep with the default rbf(1) kernel
print(svm.predict(data_x))     # predictions
svm.get_svms()                 # the C(n,2) pairwise decision functions (meaningful for a linear kernel)
print(svm.a)                   # Lagrange multipliers
```

Finally, plot the decision regions with mlxtend:

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

import numpy as np

colors = ['red','green','blue','purple']

data_x = np.array([[-2,1],[-2,2],[-1,1],[-1,2],

[1,1],[1,2],[2,1],[2,2],

[1,-1],[1,-2],[2,-1],[2,-2],

[-2,-1],[-2,-2],[-1,-1],[-1,-2]])

data_y = np.array([[0],[0],[0],[0],

[1],[1],[1],[1],

[2],[2],[2],[2],

[3],[3],[3],[3]]).reshape(-1)

fig = plt.figure()

fig = plt.scatter(data_x[:,0],data_x[:,1],c=data_y, cmap=ListedColormap(colors))

from mlxtend.plotting import plot_decision_regions

x=np.linspace(-3,3,1000)

test_x = np.array(np.meshgrid(x,x)).T.reshape(-1,2)

test_y = svm.predict(test_x).reshape(-1).numpy()

scatter_kwargs = {'alpha': 0.0}

fig =plot_decision_regions(test_x, test_y, clf=svm,scatter_kwargs=scatter_kwargs)

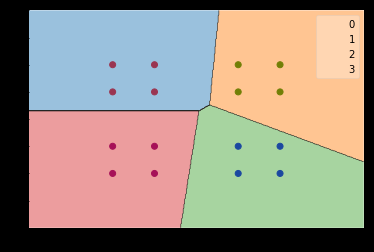

plt.show()绘制结果:
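For reference, the two-multiplier step inside fit() can be isolated as a plain function. This is a sketch of the standard analytic SMO update (Platt), not the author's code; the function name is hypothetical, and the kernel values K11, K12, K22 and errors E1, E2 are passed in as numbers. Unlike fit(), it also skips pairs with L >= H, as the standard algorithm does.

```python
def smo_pair_step(a1, a2, y1, y2, E1, E2, K11, K12, K22, C):
    """One analytic SMO update of (a1, a2); returns the new pair, or None if skipped."""
    # feasible segment for a2 so that both multipliers stay in [0, C]
    if y1 != y2:
        L, H = max(0.0, a2 - a1), min(C, C + a2 - a1)
    else:
        L, H = max(0.0, a1 + a2 - C), min(C, a1 + a2)
    eta = K11 + K22 - 2.0 * K12  # curvature along the constraint line
    if L >= H or eta <= 0:
        return None
    a2_new = min(max(a2 + y2 * (E1 - E2) / eta, L), H)  # unconstrained step, then clip
    a1_new = a1 + y1 * y2 * (a2 - a2_new)               # keeps sum_i a_i y_i unchanged
    return a1_new, a2_new

# x1 = [1, 0] (y=+1), x2 = [-1, 0] (y=-1), linear kernel, a = 0 and b = 0, so g(x) = 0
print(smo_pair_step(0.0, 0.0, 1, -1, E1=-1.0, E2=1.0, K11=1.0, K12=-1.0, K22=1.0, C=1.0))
```

For this two-point problem a single step already reaches the dual optimum (0.5, 0.5).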


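First, to split the network into modules (sub-networks) whose weights can be loaded separately, marked as trainable or not, and reused, give the network weights a scope as shown below. A collection can be used to save hidden-layer outputs as a dictionary, and layers defined inside a with xxx: block should also be given their own scope.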

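```python
import tensorflow as tf
import tensorflow.contrib.slim as slim

# input (the input tensor) and lrelu (a leaky ReLU) are assumed to be defined elsewhere
# when the variables need to be reused, set reuse=tf.AUTO_REUSE
with tf.variable_scope("model_name", reuse=tf.AUTO_REUSE) as sc:
    # the network can now be defined below
    conv1 = slim.conv2d(input, 32, [3, 3], rate=1, activation_fn=lrelu, scope='layer_name')
    # an end_points dictionary can also hold hidden-layer outputs
    end_points = slim.utils.convert_collection_to_dict("collection_name")
    end_points[sc.name + "/layer_name"] = conv1
```

Next, where the optimizer is set up, restrict training to that scope: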

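```python
loss = ...  # placeholder: define your loss here
# get the list of trainable variables under the scope
train_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, "model_name")
# pass that list as var_list so only these variables are updated
opt = tf.train.AdamOptimizer(learning_rate=1e-5).minimize(loss, var_list=train_vars)
```

Finally, load the pretrained weights: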

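```python
# initialize the variables before loading the weights
sess.run(tf.global_variables_initializer())
# Problematic: tf.train.init_from_checkpoint works by overriding the variables'
# initializers, so it only takes effect if called before the initializer above runs
# tf.train.init_from_checkpoint("model_name.ckpt", {"model_name/": "model_name/"})
# a better way: restore only the variables in the scope with a Saver
saver = tf.train.Saver(tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, "model_name"))
saver.restore(sess, "model_name.ckpt")
```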

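To debug, call the eval() method of the tensors passed between TensorFlow/Keras layers. Inside a TensorFlow Session, run tf.global_variables_initializer() to initialize the variables defined earlier. You can then change the globally defined test tensors to feed custom inputs to the network, and use eval() to inspect the outputs between layers.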

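```python
input_test = X[0], Y[0]  # a test slice taken from the input data
output_test = model(
    [tf.convert_to_tensor(input_test[0][0], float),
     tf.convert_to_tensor(input_test[0][1], float)]
)  # feed it to the network to get the final output
loss = my_loss(tf.convert_to_tensor(input_test[1], float), output_test)
test_x1 = tf.convert_to_tensor([[1,2],[2,3]], float)  # test input for my_layer, first argument
test_x2 = tf.convert_to_tensor([[2,1],[4,3]], float)  # test input for my_layer, second argument
test_simulation = my_layer([test_x1, test_x2])        # compute the test result
print(test_simulation.shape)  # the shape of an intermediate layer can be printed without a session
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(test_simulation.eval())
    print(input_test[1], output_test.eval())
    print(loss.eval())
```

TypeError: Input 'b' of 'MatMul' Op has type float32 that does not match type float64 of argument 'a'.

This error can occur when a tensor fed to the model (or a function) does not have the expected float32 dtype. Convert the offending input with tf.convert_to_tensor(), setting its dtype argument to np.float32 or float (if you don't mind the extra work, convert every tensor this way; that is sure to fix it). Note that this converts Python and NumPy values; a value that is already a float64 tf.Tensor has to be cast with tf.cast(x, tf.float32) instead. For example: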

```python
input = tf.convert_to_tensor(input, np.float32)
```

ValueError: An operation has `None` for gradient. Please make sure that all of your ops have a gradient defined (i.e. are differentiable). Common ops without gradient: K.argmax, K.round, K.eval.

When this happens, the custom loss function is usually at fault, for example because it uses one of these non-differentiable ops.

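ValueError: Error when checking target: expected dense to have 3 dimensions, but got array with shape (1, 1)

This error is usually caused by a mismatch between the shape (number of dimensions) of the input data or label tensors and the network's input or output layer. For example, when fitting training data with model.fit_generator(), suppose the generator passed in is defined as follows: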

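```python
X = [1, 2, 3]
Y = [1, 2, 3]

def generator(X, Y):
    while True:
        for i in range(len(Y)):
            yield [[[X[i]]]], [[[Y[i]]]]

model.fit_generator(generator(X, Y), steps_per_epoch=1, epochs=10, verbose=1)
```

The training data X produced by this generator is converted by fit_generator into a tensor of shape (1, 1, 1), so the model's input layer must have shape (None, 1, 1). Input layer shapes follow the convention (batch size, input_shape[0], input_shape[1]), i.e. the input_shape argument of the first layer should be (1, 1). The labels Y produced by the generator also become a (1, 1, 1) tensor, so the batch size is 1 and the output layer should be designed with shape (None, 1, 1). Note: the first entry of an input or output layer shape is the batch size, which is unrelated to the dimensions and shape of the actual data; the input_shape argument of the input layer only specifies the shape of one sample, which makes up the remaining entries of the input layer's shape.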
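Each level of list nesting becomes one dimension once the generator output is converted to an array, so the shape can be checked in NumPy before training. A sketch, assuming Keras converts the yielded lists the way np.array does; input_layer_shape is an illustrative name:

```python
import numpy as np

X = [1, 2, 3]
nested = [[[X[0]]]]                     # a scalar wrapped in three lists
print(np.array(nested).shape)           # (1, 1, 1): batch of 1, then input_shape (1, 1)
print(np.array([[[[X[0]]]]]).shape)     # (1, 1, 1, 1): one level too many

input_layer_shape = (1, 1, 1)           # hypothetical: (batch size, *input_shape)
reshaped = np.array(X[0]).reshape(input_layer_shape)
print(reshaped.shape)                   # (1, 1, 1)
```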

To avoid this hassle, reshape the data to fit the network's input and output shapes before feeding it in. For the example above:

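```python
import numpy as np

X = [1, 2, 3]
Y = [1, 2, 3]
input_layer_shape = (1, 1, 1)   # example: (batch size, *input_shape) of your model
output_layer_shape = (1, 1, 1)  # example: (batch size, *output shape) of your model

def generator(X, Y):
    while True:
        for i in range(len(Y)):
            yield (np.array(X[i]).reshape(input_layer_shape),
                   np.array(Y[i]).reshape(output_layer_shape))

model.fit_generator(generator(X, Y), steps_per_epoch=1, epochs=10, verbose=1)
```

AttributeError: 'Model' object has no attribute 'total_loss'

This usually means something went wrong while the code defining and compiling the model was running: the model was not built correctly and the error went unhandled, so the half-built model is missing some of its attributes.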

Your memory is full; try reducing the size of the model, or switch to a machine with more memory.

ResourceExhaustedError: OOM when allocating tensor with shape[1,64,1080,1920] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc

```
[[{{node concat_3}} = ConcatV2[N=2, T=DT_FLOAT, Tidx=DT_INT32, _device="/job:localhost/replica:0/task:0/device:GPU:0"](conv2d_transpose_3, g_conv1_2/Maximum, concat-2-LayoutOptimizer)]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
[[{{node DepthToSpace/_3}} = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_277_DepthToSpace", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
```

Switching to a machine with more GPU memory solved it.

If two or more Python processes are using the GPU, runtime errors such as copy errors sometimes occur; closing the other processes or kernels fixes it.

Because loss functions are often complex, the loss frequently becomes NaN. To track down such bugs, check the tensors one at a time with:

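```python
tf.reduce_any(tf.math.is_nan(value)).eval(session=tf.Session())
```

(A fresh session like this only works for tensors that do not depend on variables or placeholders; otherwise evaluate in the session that holds them.) Functions that commonly produce NaN are tf.math.divide, tf.math.log and tf.math.sqrt. For NaN caused by division by zero, the following function keeps the denominator away from 0: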

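```python
def safe_divisor(x):
    # replace denominators in [0, 1e-6) by 1e-6 (small negative values are left unchanged)
    return tf.where(tf.logical_and(tf.less(x, 1e-6), tf.greater_equal(x, 0)), 1e-6 * tf.ones_like(x), x)
```

For log and sqrt, the following function keeps the argument positive: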

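```python
def safe_positive(x):
    return tf.math.abs(x) + 0.001

def normalize(image):
    # min-max normalize to [0, 1]; a constant image is returned unchanged
    i_max = tf.math.reduce_max(image)
    i_min = tf.math.reduce_min(image)
    # in TF1 graph mode, `i_max == i_min` compares the Tensor objects rather than
    # their values, so the branch has to be expressed with tf.cond
    return tf.cond(tf.equal(i_max, i_min),
                   lambda: image,
                   lambda: tf.math.divide(image - i_min, i_max - i_min))
```

A learning rate that is too large can also turn the loss into NaN. A telltale sign is that the loss is fine right after random initialization, before any backpropagation step, and only becomes NaN after some updates.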
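The edge cases of these guards are easy to check in NumPy, where the same operations behave alike. In this sketch (the _np names are hypothetical mirrors of the TF functions), a tiny negative denominator such as -1e-9 is not caught by safe_divisor, so a division can still blow up, while log of 0 or of a negative number yields -inf or NaN unless safe_positive is applied first:

```python
import numpy as np

def safe_divisor_np(x, eps=1e-6):
    # NumPy mirror of safe_divisor(): only values in [0, eps) are replaced by eps
    x = np.asarray(x, dtype=np.float64)
    return np.where((x >= 0) & (x < eps), eps, x)

def safe_positive_np(x):
    # NumPy mirror of safe_positive(): |x| + 0.001 is always > 0
    return np.abs(x) + 0.001

d = np.array([0.0, 1e-9, -1e-9, 2.0])
print(safe_divisor_np(d))  # -1e-9 passes through unchanged
with np.errstate(divide="ignore", invalid="ignore"):
    print(np.log(np.array([0.0, -1.0])))            # [-inf  nan] without the guard
print(np.log(safe_positive_np(np.array([0.0, -1.0]))))  # finite values
```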

ValueError: Cannot convert a partially known TensorShape to a Tensor: xxx

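Statements such as x, y, z = tensor.shape lead to this error once those values are passed to TF ops: tensor.shape is a static TensorShape, not a Tensor, and its unknown dimensions cannot be converted into a Tensor. Use the dynamic shape tf.shape(tensor) instead.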

TypeError: Cannot create initializer for non-floating point type.

This error is caused by a mismatch between a placeholder's dtype and the network's input dtype.

Adding sess.run(tf.global_variables_initializer()) after all placeholders, variables and the optimizer have been defined fixes it.

ValueError: Cannot convert an unknown Dimension to a Tensor: ?

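Change tensor.shape[0] to tf.shape(tensor)[0]: the static shape shows the unknown batch dimension as ?, while tf.shape returns its value at run time.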


```python
import os
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt  # used by load_image_to_memory

def mkdir(result_dir):
    if not os.path.isdir(result_dir):
        os.makedirs(result_dir)
    return

def load_ckpt_initialize(checkpoint_dir, sess):
    # initialize all variables, then restore the latest checkpoint in checkpoint_dir if there is one
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())
    ckpt = tf.train.get_checkpoint_state(checkpoint_dir)
    if ckpt:
        print('loaded ' + ckpt.model_checkpoint_path)
        saver.restore(sess, ckpt.model_checkpoint_path)
    return saver

def sobel_loss(img1, img2):
    # L1 loss between binarized Sobel edge maps. Originally meant to prevent blurring,
    # but it seems to have problems: the network learns edges that do not exist.
    # Note: the thresholding (edge > mean) is not differentiable, so this term
    # passes no gradient back to the images
    edge_1 = tf.math.abs(tf.image.sobel_edges(img1))
    edge_2 = tf.math.abs(tf.image.sobel_edges(img2))
    m_1 = tf.reduce_mean(edge_1)
    m_2 = tf.reduce_mean(edge_2)
    edge_bin_1 = tf.cast(edge_1 > m_1, tf.float32)
    edge_bin_2 = tf.cast(edge_2 > m_2, tf.float32)
    return tf.reduce_mean(tf.math.abs(edge_bin_1 - edge_bin_2))

def load_image_to_memory(image_dir):
    # load every image under image_dir into memory as a list;
    # image_dir must end with a path separator
    if not image_dir.endswith(("/", "\\")):
        print("invalid dir")
        return -1
    image_pack = []
    for i in os.listdir(image_dir):
        image_tmp = plt.imread(image_dir + i)
        image_pack.append(image_tmp)
    return image_pack

def generate_batch(train_pics, batch_size, dark_pack, gt_pack):
    """
    :argument
        train_pics: number of images
        batch_size: int
        dark_pack: list of train_pics images, each an nparray [h, w, c]
        gt_pack: list of train_pics images, each an nparray [h, w, c] at twice the resolution of dark_pack
    :returns
        nparrays [batch_size, h, w, c]: one randomly cropped batch of inputs and ground truth
    """
    input_patches = []
    gt_patches = []
    for ind in np.random.permutation(train_pics)[:batch_size]:
        imgrgb = dark_pack[ind]
        imggt = gt_pack[ind]
        W = imgrgb.shape[0]
        H = imgrgb.shape[1]
        ps = max(min(W//4, H//4), 8)  # patch size
        xx = np.random.randint(0, W - ps)
        yy = np.random.randint(0, H - ps)
        img_feed_in = imgrgb[np.newaxis, :, :, :]
        img_feed_gt = imggt[np.newaxis, :, :, :]
        # random crop and flip to generate patches
        input_patch = img_feed_in[:, xx:xx + ps, yy:yy + ps, :]
        gt_patch = img_feed_gt[:, xx * 2:xx * 2 + ps * 2, yy * 2:yy * 2 + ps * 2, :]
        if np.random.randint(2, size=1)[0] == 1:  # random vertical flip
            input_patch = np.flip(input_patch, axis=1)
            gt_patch = np.flip(gt_patch, axis=1)
        if np.random.randint(2, size=1)[0] == 1:  # random horizontal flip
            input_patch = np.flip(input_patch, axis=2)
            gt_patch = np.flip(gt_patch, axis=2)
        if np.random.randint(2, size=1)[0] == 1:  # random transpose
            input_patch = np.transpose(input_patch, (0, 2, 1, 3))
            gt_patch = np.transpose(gt_patch, (0, 2, 1, 3))
        input_patch = np.minimum(input_patch, 1.0)
        input_patches.append(input_patch)
        gt_patches.append(gt_patch)
    return np.concatenate(input_patches, 0), np.concatenate(gt_patches, 0)

def cov(x, y):
    # per-image covariance of NHWC images
    # n, h, w, c
    x_bar = tf.reduce_mean(x, axis=[1, 2, 3])
    y_bar = tf.reduce_mean(y, axis=[1, 2, 3])
    x_bar = tf.einsum("i,jkl->ijkl", x_bar, tf.ones_like(x[0, :, :, :]))
    y_bar = tf.einsum("i,jkl->ijkl", y_bar, tf.ones_like(x[0, :, :, :]))
    return tf.reduce_mean((x - x_bar)*(y - y_bar), [1, 2, 3])

def cumsum(xs):
    # TensorFlow version of np.cumsum along axis 0 (tf.cumsum(xs, axis=0) does the same)
    values = tf.unstack(xs)
    out = []
    prev = tf.zeros_like(values[0])
    for val in values:
        s = prev + val
        out.append(s)
        prev = s
    result = tf.stack(out)
    return result

def piecewise_linear_fn(x, x1, x2, y1, y2):
    # piecewise linear interpolation: a ramp from (x1, y1) to (x2, y2), 0 outside [x1, x2]
    return tf.where(tf.logical_or(tf.less(x, x1), tf.greater(x, x2)),
                    tf.zeros_like(x),  # must have the same shape as x in TF1 tf.where
                    y1 + (y2 - y1)/(x2 - x1)*(x - x1))

def count(matrix, minval, maxval):
    # count the entries with minval < matrix < maxval
    return tf.reduce_sum(tf.where(tf.logical_and(tf.greater(matrix, minval), tf.less(matrix, maxval)),
                                  tf.ones_like(matrix, dtype=tf.float32),
                                  tf.zeros_like(matrix, dtype=tf.float32)))

def generate_opt(loss):
    lr = tf.placeholder(tf.float32)
    opt = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss)
    return opt, lr

def generate_weights(shape):
    weights = tf.Variable(tf.random_uniform(shape=shape, minval=0.0, maxval=1.0))
    return weights
```
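Because cov() needs a session to evaluate, a NumPy reference is handy for checking it on random data. This sketch (cov_np is a hypothetical name) computes the same per-image quantity and cross-checks one image against np.cov with bias=True, which divides by N just as reduce_mean does:

```python
import numpy as np

def cov_np(x, y):
    # per-image covariance of two NHWC batches: mean over H, W, C of (x - mean_x)(y - mean_y)
    xc = x - x.mean(axis=(1, 2, 3), keepdims=True)
    yc = y - y.mean(axis=(1, 2, 3), keepdims=True)
    return (xc * yc).mean(axis=(1, 2, 3))

rng = np.random.default_rng(0)
x = rng.random((2, 4, 4, 3))
y = 0.5 * x + rng.random((2, 4, 4, 3))
print(cov_np(x, y)[0], np.cov(x[0].ravel(), y[0].ravel(), bias=True)[0, 1])
```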

RuntimeError: Input type (torch.cuda.FloatTensor) and weight type (torch.FloatTensor) should be the same

Add model.cuda() after initializing the model: the input is already on the GPU, but the model's weights are still on the CPU.

RuntimeError: cuda runtime error (59) : device-side assert triggered at xxx

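Rerun the Python script with the following environment variable set, which makes CUDA kernel launches synchronous so that the error is reported where it actually happens:

```bash
CUDA_LAUNCH_BLOCKING=1 python your_script.py
```

Then debug based on the assertion failure message.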

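```python
a if condition else b + c if condition else d
# is parsed as
a if condition else (b + c if condition else d)
```

A conditional expression has the lowest precedence, so always put parentheses around an if-else expression that is part of a larger expression.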
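A small demonstration with made-up values: without parentheses the second conditional swallows the addition, so the result is d instead of the intended sum.

```python
a, b, c, d = 1, 10, 100, 1000
flag = False

# intended: (a if flag else b) + (c if flag else d), i.e. 10 + 1000
unparenthesized = a if flag else b + c if flag else d
parenthesized = (a if flag else b) + (c if flag else d)
print(unparenthesized, parenthesized)  # 1000 1010
```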

A KeyError is raised when a key cannot be found in a dictionary.

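PyTorch indexing returns a view of the original tensor rather than a copy, so a variable meant to keep a historical value must not be assigned with a plain =; use clone() instead:

```python
import torch

a = torch.zeros((2, 3))
a_old = a[1, 1]            # a view into a: tensor(0.)
a_copy = a[1, 1].clone()   # an independent copy: tensor(0.)
a[1, 1] = 1
print(a_old)   # tensor(1.): the view follows the change
print(a_copy)  # tensor(0.): the clone keeps the old value
```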